If last quarter was defined by ever-cheaper, ever-faster frontier models, this week was about who controls the data (and the people) that fuel them:

Atlassian, Notion, Slack, and Cloudflare each tightened or priced the very endpoints that power cross-app search and agents, reminding builders that context has a landlord.

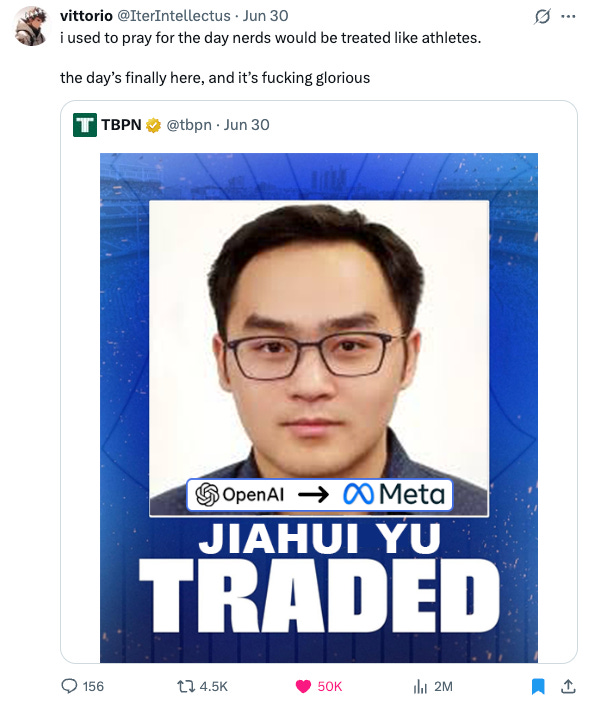

Meanwhile, Meta opened its checkbook and hoovered up a starting lineup of OpenAI, DeepMind, and Anthropic researchers. The pay packages are sized like NBA contracts… with pleeeenty of hurt feelings to match.

In parallel, agent tooling kept marching forward: Cursor pushed agents into the browser, Apple is trial-running Claude, and both OpenAI and Anthropic shipped hooks and web-services that turn chat into accountable workflows.

TL;DR: Access – whether to data, people, tooling, or compute – is now the defining construct.

1️⃣ AI Researchers as NBA Players

REVENGE OF THE NERDS!

Okay, there are just SO MANY great memes here. Has there ever been a more glorious time to be a bona fide nerd? Meta has gone on an insane poaching spree, reportedly offering equally insane pay packages, and the list is impressive.

Here is the list of who is joining Alexandr Wang & Nat Friedman at Meta’s new Superintelligence lab:

And in case you needed a handy card to reference, another banger from TBPN:

The vibes are really NBA-ish! From Sarah Guo, I LOL’ed:

Okay, in all seriousness, here is the recap: As you may recall from prior posts, Meta’s new “superintelligence” group has been dangling eight-figure packages to lure senior researchers from OpenAI. Sam said that no one had bitten, but turns out… many did! I mean, look at that pay package: 🔥🔥🔥

The response inside OpenAI was visceral this week - leadership likened it to “someone breaking into our home” and promised compensation recalibration together with a renewed focus on mission over money. Sam in particular seems to feel very salty about it and said “missionaries will beat mercenaries.”

Which I get, but also, my dude… talent ain’t cheap! Also kind of rude to say you can only be a missionary at OpenAI. Let’s not forget Meta has been carrying the open source AI movement on its back. Who’s the real missionary here?

Anyways, let’s see what this superintelligence thing is all about and how connected it will be to Llama / open-source vs. not. In the meantime, I’ll leave you with one more great post:

2️⃣ Corporate data access fights escalate

Enterprise vendors are tightening API policies just as AI search and agent platforms need richer context:

Atlassian and Notion are throttling or reevaluating access for Glean and similar tools, according to reporting in The Information. Atlassian began rate-limiting Glean in February after an earlier (unsuccessful) acquisition offer; Notion is reviewing its developer terms now and “plans to adjust rate limits to balance for demand and reliability.”

Slack (Salesforce) lowered rate limits on key history endpoints and now bars long-term storage of messages by non-Marketplace apps, directly cutting off the stream many AI search vendors rely on.

Cloudflare flipped the default: new sites on its network now block known AI crawlers such as OpenAI’s GPTBot unless given explicit permission, and the company opened a “Pay Per Crawl” marketplace so publishers can charge per request.

The common theme is control: platforms want to keep high-value content and telemetry inside their own ecosystems while they build competing AI features.

🚨🚨 Very important note for builders:

Startups that depend on unrestricted APIs should plan for rate-limit tiers, paid access, or partner agreements sooner rather than later.
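If you want a feel for what "planning for rate limits" looks like in code, here is a minimal sketch, not anyone's official client. It assumes the upstream API signals throttling with HTTP 429 and an optional `Retry-After` header in whole seconds; `send`, `retry_delay`, and `call_with_backoff` are hypothetical names I made up for illustration, and `send` stands in for whatever HTTP call your integration actually makes.

```python
import random
import time
from typing import Callable, Mapping, Tuple

# (status code, response headers, body) as returned by the hypothetical `send`
Response = Tuple[int, Mapping[str, str], str]


def retry_delay(headers: Mapping[str, str], attempt: int,
                base: float = 1.0, cap: float = 60.0) -> float:
    """Seconds to wait before the next attempt."""
    retry_after = headers.get("Retry-After")
    # Only the integer-seconds form is handled here; the HTTP-date form
    # falls through to the backoff below.
    if retry_after is not None and retry_after.isdigit():
        return min(float(retry_after), cap)
    # Exponential backoff with full jitter, so many clients don't retry in sync.
    return random.uniform(0, min(cap, base * 2 ** attempt))


def call_with_backoff(send: Callable[[], Response], max_attempts: int = 5,
                      sleep: Callable[[float], None] = time.sleep) -> Tuple[int, str]:
    """Call `send` until it stops returning 429 or the attempts run out."""
    for attempt in range(max_attempts):
        status, headers, body = send()
        if status != 429:
            return status, body
        if attempt < max_attempts - 1:
            sleep(retry_delay(headers, attempt))
    raise RuntimeError("rate limited: retries exhausted")
```

Passing `sleep` in as a parameter keeps the retry logic testable without real waiting. In a real integration you would likely also track how often you hit 429 per vendor, since that number tells you when it is time to negotiate a paid tier or partner agreement.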

3️⃣ Apple’s dilemma: “Rent a brain, or fall behind”

In a move that would’ve shocked me two years ago given Apple’s penchant for staying extremely closed, but that shocks literally no one today given Apple Intelligence is MIA, Bloomberg reports Apple is testing Claude and ChatGPT to power Siri after repeated delays to its internal LLM. Talks are reportedly tense: how much of Siri’s future to outsource, and on what economics?

Watch for:

Apple’s traditional “vertical stack” ethos running head-first into the pace of external model improvements.

Whether Apple can land a deal for custom Claude/GPT checkpoints that run inside its own “Private Cloud Compute,” letting it keep the privacy halo even if the brains come from elsewhere.

Speaking of Apple’s PCC, a shameless portfolio company plug: if you’re an enterprise that cares about provably private AI, def check out Confident Security!

Meanwhile, you can already play this in the betting markets:

4️⃣ New primitives for doing work, not just chatting

Anthropic Claude Code Hooks. Hooks feature is live! Deterministic shell hooks fire at key lifecycle events – format, log, enforce policy – finally giving teams guardrails instead of hoping the LLM “remembers.” A cool livestream on Hooks here.

OpenAI Deep Research & Webhooks. Two new API models (o3-deep-research and o4-mini-deep-research) plus webhooks let you kick off a multi-step research job and simply wait for the callback. Obvious opening for verticalized “research wrappers” with curated sources and bespoke UIs.

Why this matters: These releases turn LLMs from chatty helpers into first-class, auditable services: you can queue long-running research jobs, receive signed callbacks, attach policy-enforcing hooks at every step, and wire the whole flow straight into CI, SecOps, or analytics pipelines. In short, they make “AI in production” something your existing toolchain can monitor, version-control, and trust. No more hoping the model remembers the rules!!
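To make "signed callbacks" concrete, here is a rough sketch of how a receiver might check one. This is a generic HMAC-SHA256 scheme, not OpenAI's or Anthropic's actual webhook format; the `timestamp.body` message layout, the 5-minute tolerance, and the function names `sign` and `verify_callback` are all assumptions for illustration. Check your provider's docs for the real header names and signing rules.

```python
import hashlib
import hmac
import time
from typing import Optional


def sign(secret: bytes, timestamp: str, body: bytes) -> str:
    """Hex HMAC-SHA256 over "<timestamp>.<body>" (an assumed message layout)."""
    message = timestamp.encode() + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify_callback(secret: bytes, timestamp: str, body: bytes, signature: str,
                    now: Optional[float] = None, tolerance: float = 300.0) -> bool:
    """Accept a callback only if it is recent and its signature matches."""
    now = time.time() if now is None else now
    try:
        sent_at = float(timestamp)
    except ValueError:
        return False
    # Reject stale (or far-future) timestamps to limit replay of old callbacks.
    if abs(now - sent_at) > tolerance:
        return False
    expected = sign(secret, timestamp, body)
    # Constant-time comparison, so timing does not leak how much matched.
    return hmac.compare_digest(expected.encode(), signature.encode())
```

The point for your pipeline: verify against the raw request bytes before parsing any JSON, and only then hand the result to CI, SecOps, or analytics. That is what makes the callback something your toolchain can trust rather than just receive.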

5️⃣ Cursor breaks out of the IDE

Cursor’s web and mobile launch lets its background coding agents run tasks while you’re away, surface diffs in a browser, and nudge teammates in Slack. In short, “agent-as-service” instead of “agent-in-editor.”

Builder takeaways:

Frictionless entry points (web, Slack, phone) > pure desktop integration.

Shared PR links make agent output reviewable – good for the rising class of security-conscious enterprises that won’t let code touch main sight unseen.

6️⃣ Long weekend reading ahead of the 4th!

I neglected to mention the Bond AI deck (a Mary Meeker classic) from a few weeks back, and given ICONIQ released a mega State of AI survey, I figured I’d share both!

ICONIQ’s 2025 State of AI: 80% of AI-native companies are already betting on agentic workflows; budgets are up, but cost discipline is getting sharper – multi-model architectures average 2.8 models per product to balance price/perf. Here for the full report.

Bond Cap (Mary Meeker) 340-slide AI deck: AI trends galore! Mary Meeker is famous for these reports. Among dozens of trends, the report flags that the “model-factory” business is already commoditising: OpenAI, Anthropic, and xAI have raised roughly $95B in capital yet generate only about $11B in annualised revenue, leaving the door open for leaner challengers.

Reading between the lines: The conversation will drift from “Which model scores higher?” to “Can we ship outcome-priced agents before margin pressure closes the window?”

This week’s takeaways:

Access is the new moat, and to win you’ll need to:

Lock down your context supply: Enterprise platforms are already throttling endpoints and rolling out “pay-per-crawl” tolls – secure the API and data partnerships you depend on before the gate fully closes.

Build for quick swaps: Treat webhooks and deterministic code hooks as plumbing so you can hot-swap models or checkpoints without a rewrite when cost, quality, or policy shifts.

Budget for a blended model stack: Many products in the market already run ~3 different models each to hit the best price-performance trade-off. Worth thinking about your own stack; one way is to instrument every call and auto-route tasks to the cheapest model that still meets the SLA.

Compete on mission + velocity: Eight-figure offers move top researchers overnight; a fast shipping cadence (which drives growth and relevance) plus a purpose they believe in is what keeps them.
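For the blended-stack point above, here is a minimal sketch of "route to the cheapest model that still meets the SLA." Everything in it is hypothetical: the `ModelStats` fields, the model names, and the numbers are placeholders, and in practice the stats would come from your own call instrumentation and evals rather than a hard-coded list.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class ModelStats:
    name: str
    cost_per_1k_tokens: float  # measured or list price, in dollars
    p95_latency_s: float       # observed 95th-percentile latency
    quality: float             # eval pass rate for this task type, 0..1


def pick_model(models: Iterable[ModelStats], max_latency_s: float,
               min_quality: float) -> Optional[ModelStats]:
    """Return the cheapest model that satisfies the SLA, or None if none does."""
    eligible = [m for m in models
                if m.p95_latency_s <= max_latency_s and m.quality >= min_quality]
    return min(eligible, key=lambda m: m.cost_per_1k_tokens, default=None)


# Placeholder fleet; names and figures are made up for illustration.
FLEET = [
    ModelStats("small-model", 0.10, 0.8, 0.82),
    ModelStats("mid-model", 0.60, 1.5, 0.91),
    ModelStats("large-model", 3.00, 4.0, 0.97),
]

choice = pick_model(FLEET, max_latency_s=2.0, min_quality=0.90)
print(choice.name if choice else "no model meets the SLA")
```

Returning `None` instead of silently falling back forces the caller to decide what happens when nothing meets the SLA, which is usually a product decision rather than a routing one. Keeping the router behind one function like this also fits the "build for quick swaps" point: adding or dropping a model is a one-line change to the fleet list.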

Next week the pieces will move again… but that’s the fun part! Keep building. 💪💪

P.S. I could not end this week’s wrap up without talking about Soham-gate, honestly equal parts funny and dark.

It all started with this tweet:

Which quickly escalated into multiple companies noting they were currently employing Soham or had interviewed him recently!

Obviously memes abound about this. But this IS a good cautionary tale and a snapshot of how frenzied the AI hiring sprint has become. Everyone is fighting for talent, and it’s easy to move too quickly and skip references / back-channels. Don’t fall into that temptation!! Trust, but verify!
