Bargain Tokens, Billion-Dollar Brains

Inference went bargain-bin, talent went luxury-tag, and Apple kept polishing glass

Three moves, one theme: the gap between what silicon can do and what companies can ship is widening. OpenAI’s 80% price cut turned heavyweight inference into pocket change. Meta forked over literal billions for Alexandr Wang because the real choke point is now human judgment and feedback. And Apple – still printing cash – couldn’t get Siri out of first gear at WWDC. Cheap compute, pricey insight, stalled execution: that tension runs through every story that follows.

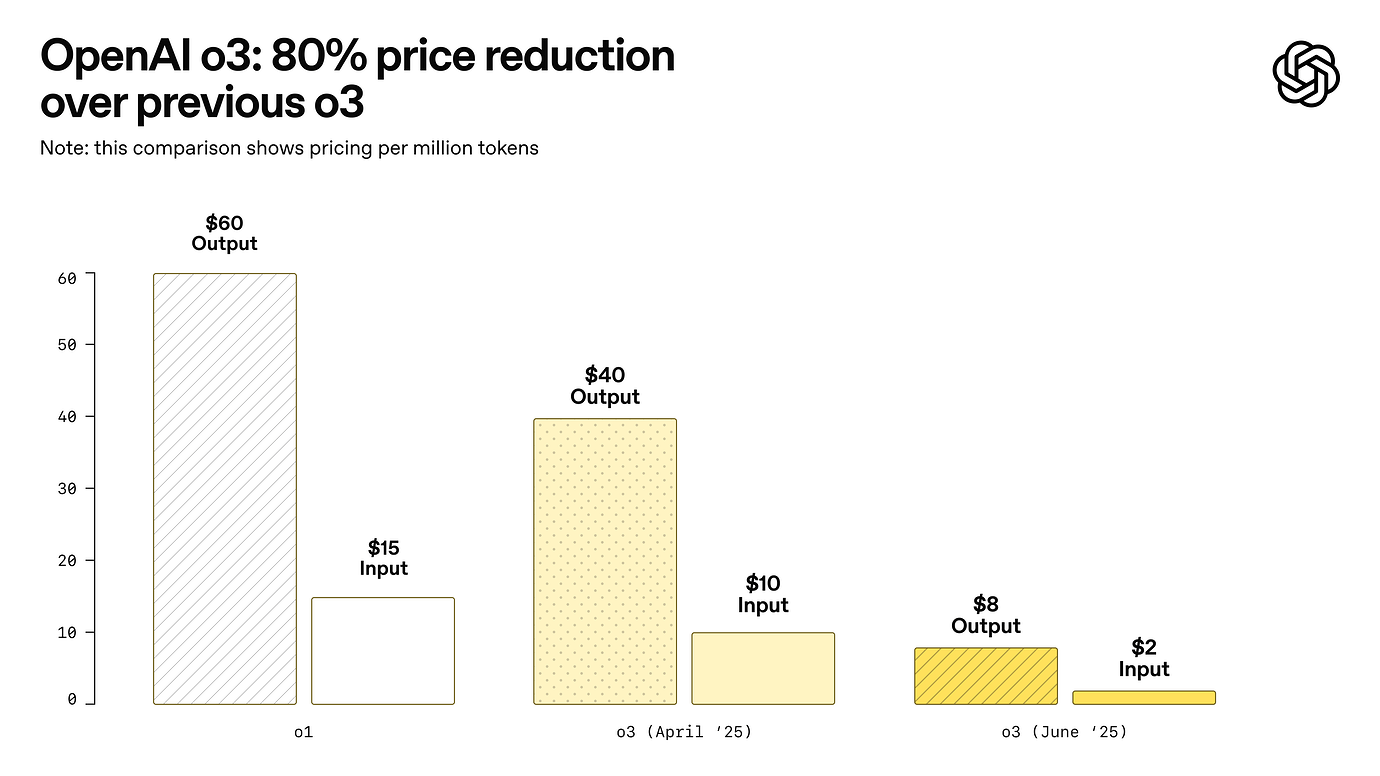

OpenAI makes reasoning (extremely!) cheap

’Tis sale season!!! OpenAI cut o3’s API prices by 80%!!!! Wowza. ARC Prize reran its benchmarks after the change and found no drop in quality, so this isn’t a clearance sale on stale merch – it’s the same model for a fifth of the cost.

Why this matters:

Projects that once blew through a monthly budget in a day (think multi-step agents, exhaustive code review, or big retrieval jobs) now pencil out.

Roadmaps should assume another price cut is coming; optimize for capability first, cost later.

If you’re pricing an AI product on “tokens consumed,” revisit your margin model this week.
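To make the margin point concrete, here is a minimal back-of-envelope sketch of what an 80% per-token cut does to a single multi-step agent run. Everything in it is hypothetical: the "before" prices, the token counts, the flat fee you charge per run, and the helper names (`Prices`, `job_cost`, `gross_margin`). None of these are OpenAI's published rates or any real product's numbers. Only the 80% figure comes from the announcement.

```python
# Back-of-envelope: what an 80% API price cut does to a token-metered product.
# All prices, token counts, and the per-run fee are HYPOTHETICAL placeholders,
# not OpenAI's published rates or any real product's numbers.
from dataclasses import dataclass


@dataclass(frozen=True)
class Prices:
    input_per_m: float   # USD per 1M input tokens (assumed)
    output_per_m: float  # USD per 1M output tokens (assumed)

    def after_cut(self, pct: float) -> "Prices":
        """Prices after a cut, e.g. pct=0.80 means 80% off (you pay 20%)."""
        keep = 1.0 - pct
        return Prices(self.input_per_m * keep, self.output_per_m * keep)


def job_cost(p: Prices, input_tokens: int, output_tokens: int) -> float:
    """USD cost of one job (e.g. one agent run or one code review)."""
    return (input_tokens * p.input_per_m + output_tokens * p.output_per_m) / 1_000_000


def gross_margin(fee: float, cost: float) -> float:
    """Gross margin as a fraction of what the customer pays for the job."""
    return (fee - cost) / fee


if __name__ == "__main__":
    old = Prices(input_per_m=10.0, output_per_m=40.0)  # hypothetical "before" price
    new = old.after_cut(0.80)
    tokens_in, tokens_out = 200_000, 50_000            # hypothetical agent run
    fee = 5.0                                          # hypothetical price charged per run

    for label, p in (("before", old), ("after", new)):
        cost = job_cost(p, tokens_in, tokens_out)
        print(f"{label}: cost ${cost:.2f}/run, margin {gross_margin(fee, cost):.0%}")
```

With these placeholder numbers, the cost per run falls from $4.00 to $0.80, and the margin on a flat $5 fee jumps from 20% to 84%. The flip side: if you bill per token at a fixed markup, your revenue per run falls by the same 80%. That is why token-metered pricing deserves a fresh look.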

o3-pro

OpenAI also introduced o3-pro this week! It is a version of OpenAI’s o3 reasoning model, and it’s designed to “think longer and provide the most reliable responses.”

I have not personally seen a lot of hard cold data on this yet or amazing examples out in the wild (save one I’ll list in a sec), but OpenAI shared some insights of their own, stating that “reviewers consistently prefer o3-pro over o3 in every tested category and especially in key domains like science, education, programming, business, and writing help.”

Below is a chart from OpenAI comparing o3-pro with the original o3:

I tried o3-pro on a few projects this week and, candidly, did not notice a huge difference in output (granted, these were research tasks rather than programming or scientific analysis). It was, however, WAY slower than o3; it felt more like querying Deep Research than chatting with ChatGPT. So for now I wouldn’t recommend it for everyday tasks, since the base o3 reasoning model is plenty great. But I’ll soon be testing it on extremely high-context tasks and reports, per the post below:

The best hands-on review of o3-pro I’ve read is “God is hungry for Context,” a guest post by Ben Hylak and Alexis on Latent Space. Their finding: when you give o3-pro a TON of context, you really do get mind-blowing results. Read the full post for their play-by-play; below is the section that stood out most to me:

In the meantime, I LOL’ed at the below: the poor data center, with all the trolls using o3-pro just to say “hi” or other inane stuff, haha:

AI-cquihire

I think we need a new category for the special brand of M&A-circumventing deals that has been happening in AI land over the past two years. I’m calling it AI-cquihire (taking naming suggestions, though):

Amazon “bought” the Adept founding team, Microsoft “bought” the Inflection founding team, Google “bought” the Character AI founding team, and now Meta is essentially buying Alexandr Wang (via a 49% stake in Scale AI) for its new superintelligence lab.

It’s a whole new way to M&A!!

To be clear, in this case a partnership with Scale makes sense beyond the founder hire: Scale brings industrial-scale data labeling and RLHF know-how.

The other point worth noting is the one below from Sarah: given Yann LeCun’s well-known skepticism of reinforcement learning, this looks like a strategic override from the business side. Expect faster post-training cycles on future Llama releases.

In other “acqui-hire” news

The Lamini team is joining AMD! (It’s unclear what the exact deal terms were or what will happen to Lamini itself.)

Also, given all the frenzy and froth around AI researchers, Nathan Lambert (the GOAT of RLHF research!), as a joke (or so I’m assuming), offered to sell his blog Interconnects and go work for an organization for a cool $500M… and, perhaps not surprisingly in this market, got a non-joking bite! Here are the post and the subsequent reply from Hugging Face’s cofounder and CTO:

Token costs may be going way down, but their makers’ costs are going WAY up!!

Saw the below and my jaw dropped. Really crazy comp packages for AI engineers!

(I had to include the funniest reply ever in my screenshot: the Dust team on creative fundraising given the state of these comp packages. Have one of your cofounders take the job for a year, net $5M post-tax, and bring that back to the startup as fundraising 💀)

Apple’s WWDC: lots of glass, little intelligence

(Not to be crude, but doesn’t “Liquid Ass” kind of say it all? Yikes!) 😬😬

WWDC can pretty much be summarized by “Liquid Glass,” Apple’s new UI design for the iPhone and its other devices. Meanwhile, any meaningful Apple Intelligence releases have been pushed out (yet again).

Here’s the “so what” in my mind:

First, design polish only goes so far. Users (aka me) know that Siri still can’t string together multi-step requests, which makes it seem laughably ancient compared to modern AI chatbots. For the first time, I’m seeing die-hard Apple users seriously consider switching to Android.

Here is the passing Siri mention during the keynote:

"As we have shared, we are continuing our work to deliver the features that make Siri even more personal … this work needed more time to reach our high-quality bar, and we look forward to sharing more about it in the coming year."

In the coming… YEAR???!! Big woof! I still think Apple’s privacy-first, on-device stance is a shining beacon of its AI strategy… but sadly, that’s not enough. The execution gap gives third-party assistants way more runway.

And wow, OpenAI’s acquisition of Jony Ive’s io is looking more interesting by the minute, huh!

Some other thoughts on Apple around the horn:

Vanessa is spot on 🎯 – how did no one see how disappointing it would be?

Totally agree with this comment:

Anyway, disappointments galore. The level of dodging anything resembling an interesting AI advance was insane (come on, you’re a trillion-dollar company, not lacking for resources!!). So I’ll leave you with a funny meme that’s pretty much exactly accurate: no touching AI!

Intercom’s second act, powered by AI

Honestly one of the more inspiring business stories I’ve read in a while!

Intercom CEO Eoghan McCabe says Fin, Intercom’s AI agent, is on pace to hit $100M ARR within 2.5 quarters, grew 393% annualized in Q1, and won 13 of 13 competitive bake-offs.

So what?

Outcome-based pricing (see here for an infographic showing next-gen pricing across multiple players in the space) drove new-customer NRR to 146%. Seat-based SaaS is looking old next to “we fix the issue, you pay.”
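Why can outcome-based pricing push NRR so high? A toy model makes the mechanics easier to see. The sketch below is only an illustration under stated assumptions. The seat price, the $1-per-resolution price, the ticket volumes, and the cohort figures are all hypothetical and are not Intercom’s numbers. The helper names are illustrative too. The NRR formula is the standard one: (starting ARR + expansion − contraction − churn) ÷ starting ARR.

```python
# Toy comparison of seat-based vs outcome-based billing, plus the standard
# net revenue retention (NRR) formula. Every number below is HYPOTHETICAL,
# chosen only to show the mechanics; none are Intercom's figures.


def seat_revenue(seats: int, price_per_seat: float) -> float:
    """Monthly revenue when billing per human seat, regardless of work done."""
    return seats * price_per_seat


def outcome_revenue(resolutions: int, price_per_resolution: float) -> float:
    """Monthly revenue when billing only for issues the agent actually resolves."""
    return resolutions * price_per_resolution


def net_revenue_retention(start_arr: float, expansion: float,
                          contraction: float, churn: float) -> float:
    """NRR for a cohort: (start + expansion - contraction - churn) / start."""
    return (start_arr + expansion - contraction - churn) / start_arr


if __name__ == "__main__":
    # Same customer, same 10-person support team, growing ticket volume.
    for month, resolved in enumerate([1_000, 1_500, 2_200], start=1):
        print(f"month {month}: seats ${seat_revenue(10, 100.0):,.0f} "
              f"vs outcomes ${outcome_revenue(resolved, 1.0):,.0f}")

    # A hypothetical cohort that starts at $100k ARR and expands with usage.
    print(f"NRR: {net_revenue_retention(100_000, 56_000, 4_000, 6_000):.0%}")
```

Under seat pricing, this customer’s bill stays flat at $1,000 per month unless they hire more agents. Under outcome pricing, the bill tracks the work the AI agent actually does, so usage growth flows straight into expansion revenue. That is one plausible route to an NRR well above 100%, such as the 146% in the made-up cohort above.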

A good reminder that being an incumbent is no excuse to sit still instead of innovating. And another good reminder to startups competing with incumbents: never take anything for granted, and never assume the large player won’t move fast and innovate!

Technical releases

Meta V-JEPA 2: A self-supervised “world model” that scores well on physical-reasoning benchmarks. It is useful groundwork for embodied AI and a step toward building Advanced Machine Intelligence (AMI).

Mistral Compute: Mistral launches a Europe-based cloud! Customers can access bare metal, managed infrastructure, and Mistral’s training suite. The announcement also includes an important note on geopolitics: “Mistral Compute aims to provide a competitive edge to entire industries and countries in Europe, the Middle East, Asia, and the entire Southern Hemisphere that have been waiting for an alternative to US or China-based cloud and AI providers.”

ByteDance Seedance 1.0: A text- and image-to-video generator producing highly realistic and coherent 1080p clips, putting fresh pressure on Google Veo 3 and Runway. This week it claimed the #1 spot on the Artificial Analysis video arena leaderboard.

Mistral Magistral: Mistral’s first reasoning-focused model family, trained end-to-end with the company’s own RL pipeline and released in two sizes: magistral-small (open weights) and magistral-medium (commercial). It aims for transparent, verifiable chain-of-thought across professional domains and handles prompts in many languages.

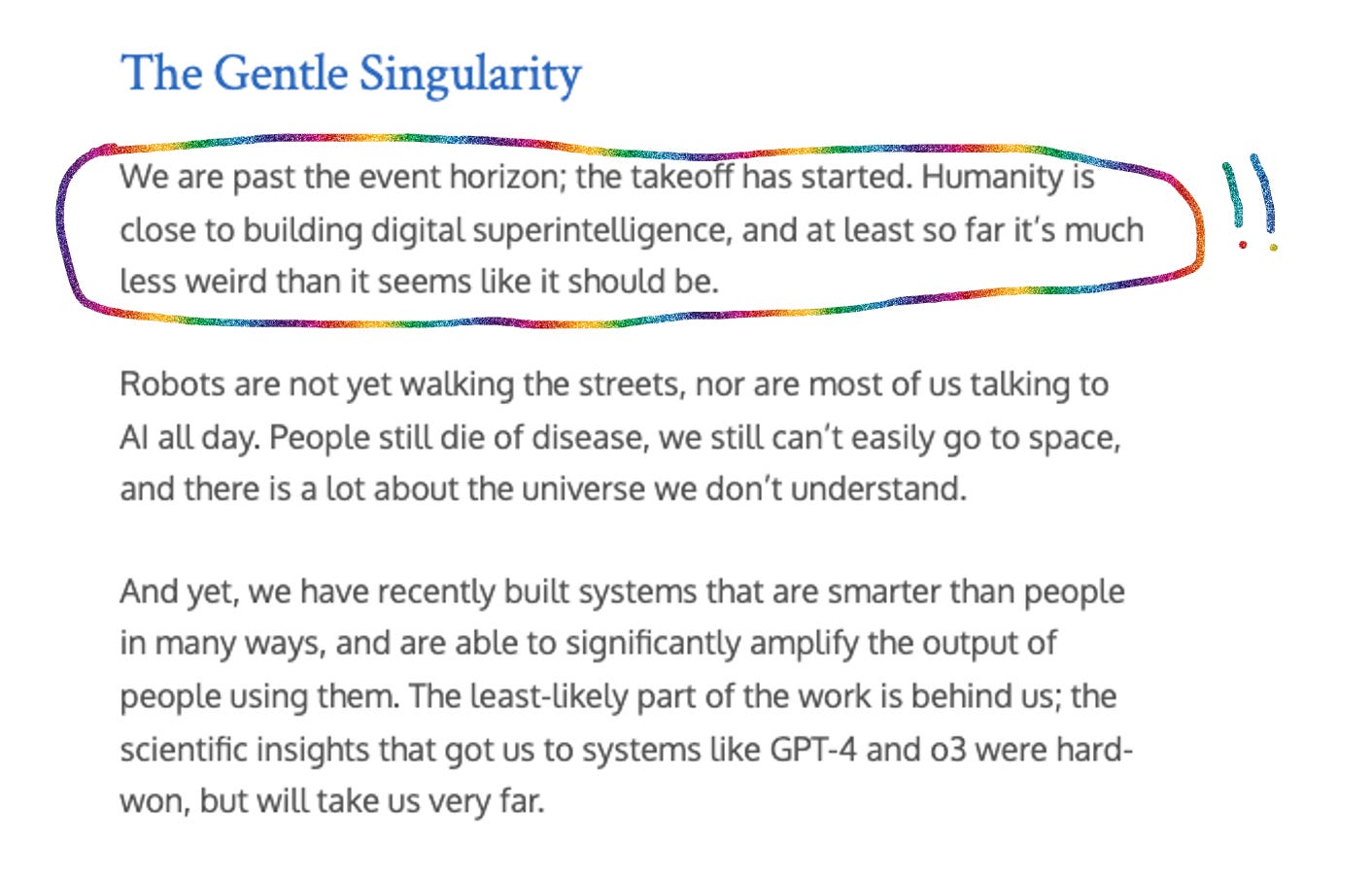

Food for Thought

Sam Altman’s essay “The Gentle Singularity” is a worthwhile read. In particular, it treats AGI as a gradient rather than a single moment, which is something I’ve believed for some time. Waymos are a great example. They’ve suddenly become so normal that we no longer think of them as exceptional, but really all of this (everything in AI over the past two years) is already quite exceptional. The takeoff, indeed, has started.

Wrapping up

Between plummeting token prices and ballooning AI comp packages, the ground is shifting under AI builders almost weekly. I’d keep two questions on my dashboard:

If inference were basically free, what would I build?

What proprietary signal – whether it’s data, workflow context, or domain expertise – keeps that thing defensible when everyone else can afford the same models?

Answers welcome; my DMs (as always!) are open!

— Jess