I don’t mean to sound dramatic, but sometimes drama is warranted. OpenAI released Deep Research on Monday, and all I can say is “holy shit.” Pardon my French; my last holy shit was in 2022, when ChatGPT came out.

Sometimes, the force of technological progress hits you all at once. Yes, Google had released a research product (also named Deep Research, lol), and did any of us care? Not reeeaally. It’s not as in-depth and often defaults back to “this is how YOU could do this research” rather than doing it outright. Maybe the Google DR held some glimmers of what a powerful research assistant might look like, but IMO it wasn’t quite inspiring enough.

OpenAI’s Deep Research is something else entirely, and not just because of reasoning and test-time compute. The breakthrough is twofold: reliability (it references and verifies external data, which is what earns our trust in its output), and the ability to retain memory and coherence over a long stretch of time while taking actions.

In a blink of an AI, we have arrived in a world with truly powerful intelligence at our fingertips. Deep Research is the first time I have truly felt that AI can help orchestrate complex problem-solving. Minds have been boggled. John Hwang’s blog had some great examples of the different kinds of research it can generate.

We said 2025 was the year of agents, but it’s really shaping up to be the year of reasoning. And that realization leads me to two big ideas we need to tackle next...

The prompt engineer is dead, long live the prompt engineer

We need to start talking about labor displacement

Let’s chat about lighter things first, shall we?

Deep Research brings back the importance of prompting. The Pro subscription costs $200 a month, which gives you access to 100 reports. At $2 a report, that’s honestly a bargain, and I forked over my credit card feeling as though I got the better deal. But how mind-blowing each report is really depends on what you’re researching and what you prompt for. Deep Research is extremely powerful on its own, but with the right prompt wizardry it starts looking less like a cool research agent and more like early glimmers of true superintelligence.

On that note, one of my favorite newsletters, Algorithmic Bridge, started this week’s post with the highly provocative (and clickbait-y) title: “AGI Is Already Here—It’s Just Not Evenly Distributed.” The title was somewhat misleading as they don’t actually think AGI is here (neither do I), but it makes a compelling point:

Which brings me to the human side of this AI revolution: labor displacement.

I’m definitely not an economist (or a labor market guru), so go easy on me if I skip over any intricate theories or policy details. But, since I’m basically famous among friends for having no indoor voice, I’ll happily raise the volume on the idea that we need to address labor displacement from AGI—like, yesterday.

This isn’t just about doing the right thing (though it is). It also matters for AI’s future. If a big chunk of people find themselves out of work and struggling, they won’t be thrilled to back AI progress on the promise that it will someday cure cancer when they can’t afford to eat today.

Sure, I’ve heard the standard “just implement UBI!” or “don’t worry, new AI-powered jobs will show up eventually” takes. But those are long-run answers. In the short term, we really should be figuring out ways to help people adapt, even with something as small as teaching every employee how to use AI as a powerful tool. To tie back to my first point: good prompting, and knowing how to best leverage AI, remain key differentiators in unlocking its full potential. At the end of the day, it’s the person using the tech who holds the real power.

Anyway, I read this post on AI and the labor market last week, and it’s stayed with me because it underscores how automation could outpace our ability to adapt if we’re not proactive about reskilling. It’s worth a full read, but here are the highlights that really stuck with me:

That’s enough big-picture pondering for now. Let’s see how the AI community has already jumped on Deep Research, starting with open-source efforts that popped up almost overnight.

Deep Research, but make it open source

Hugging Face’s 24-hour sprint to open-source Deep Research

Over a whirlwind 24-hour sprint (#alwaysbeshipping), Hugging Face spun up an open-source alternative to OpenAI’s Deep Research, using code-based agents instead of JSON to tackle multi-step tasks. They improved the GAIA benchmark score from ~46% to 55.15%, though they note it’s an early effort and not yet at full parity with Deep Research, particularly because fully fledged web-browser interaction through Operator is part of what makes OpenAI’s Deep Research especially magical. Still, it’s a big step in showing how open research can power complex queries and puzzle-solving more efficiently, without locking you into a closed system.
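To make “code-based agents instead of JSON” a bit more concrete, here’s a toy Python sketch of the general idea. It is not Hugging Face’s actual implementation: the tools `web_search` and `visit_page` are hypothetical stubs (no real searching or browsing happens), and `run_json_action` / `run_code_action` are made-up helpers. The point is only that a JSON action typically encodes one tool call per step, while a single code action can chain several calls, loop over results, and keep intermediate variables.

```python
import json

# Hypothetical stub tools; a real agent would hit a search API and a browser.
def web_search(query: str) -> list[str]:
    slug = query.replace(" ", "-")
    return [f"https://example.com/{slug}/{i}" for i in range(2)]

def visit_page(url: str) -> str:
    return f"contents of {url}"

TOOLS = {"web_search": web_search, "visit_page": visit_page}

# JSON-style action: the model emits one structured tool call per step.
json_action = '{"tool": "web_search", "arguments": {"query": "GAIA benchmark"}}'

def run_json_action(action: str):
    call = json.loads(action)
    return TOOLS[call["tool"]](**call["arguments"])

# Code-style action: the model emits a Python snippet that can chain tool
# calls, loop over results, and keep intermediate state in one step.
code_action = """
urls = web_search("GAIA benchmark")
pages = [visit_page(u) for u in urls]
result = len(pages)
"""

def run_code_action(code: str):
    namespace = dict(TOOLS)  # expose only the tools to the snippet
    exec(code, namespace)    # a real system would sandbox this execution
    return namespace["result"]

if __name__ == "__main__":
    print(run_json_action(json_action))  # one call, one step
    print(run_code_action(code_action))  # search + two page visits, one step
```

In the code version, the loop over `urls` happens inside one action; with JSON-style calls, the agent would usually need a separate step (and model call) per page. That composability is the gist of the argument for code agents.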

David’s Community Launch: Introducing deep-research

David is the founder & CEO of Aomni (one of my portfolio companies and, incidentally, an AMAZING tool for sales research). David is an avid contributor to the open-source community and immediately got to work, launching an open-source implementation of the Deep Research agent one day after OpenAI’s came out. GitHub repo here.

Models be Modeling… in the app layer

While the open-source scene has been buzzing with Deep Research experiments, the big players haven’t exactly been twiddling their thumbs. Enter “agentic AI” in the app layer—new releases that could reshape how we interact with these models day to day.

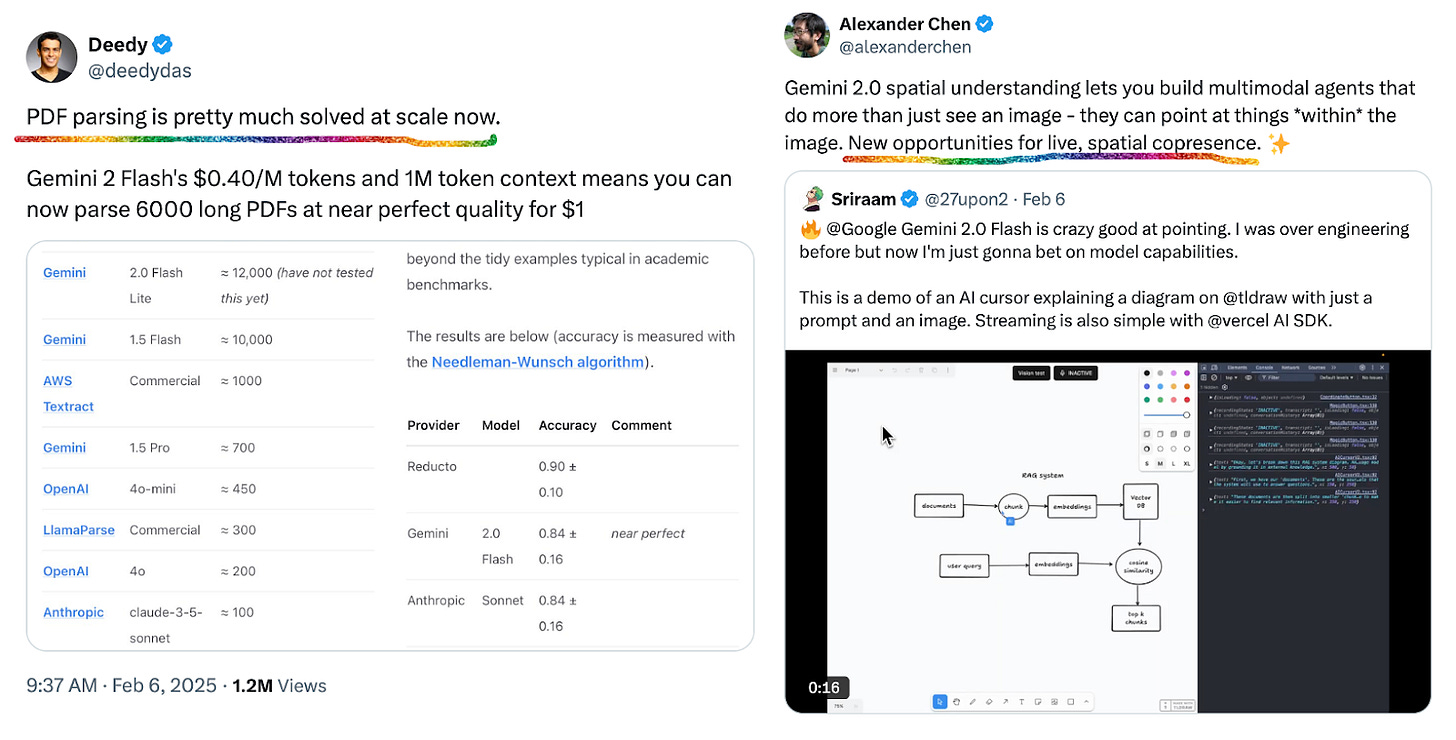

Gemini 2.0

Google’s Gemini 2.0 is finally available to everyone. It might be Google’s biggest leap yet, with up to 2-million-token context windows, multimodal input, and specialized tiers like Flash, Pro, and Flash-Lite. The key is that, rather than feeling like a new model launch, this really feels like an agent / app layer launch. That’s because a lot of Gemini is now “agentic AI” built for real tasks: plenty of human/computer collaboration, and the live feed and screen-sharing integration is especially cool. It’s our strongest hint yet that specialized, high-capacity models are leaving demo la la land and shaping up to be the new normal. This thread has a great play-by-play on agentic capabilities worth exploring.

le Chat

Mistral released le Chat, an AI assistant / chatbot. You might ask yourself whether this feels very 2023. Well… it does a bit, but le Chat comes with some cool offerings: it’s touted as 13x faster than ChatGPT, has real-time access to the web, generates images with Flux (which tends to be better than DALL-E 3), and, importantly, is free to use!

It also raises the age-old question of infra vs. app layer: where does the value accrue?

That covers the software side. Now let’s talk about the physical world—because that’s clearly next in line for AI’s expansion.

Let’s get physical!

AI can do so much already (*scoffs*, I know that’s the understatement of the century), but it is still constrained to the digital world. The physical world comes next, and OpenAI is serious about it. We already expected robots, given that they’re hiring for a robotics team, but it was interesting to see OpenAI also file a trademark for smart jewelry (i.e., wearables). I both love this and am slightly terrified of a future where OpenAI melds with my body and takes over my brain. (*nervous giggles*)

Now, let’s get technical!

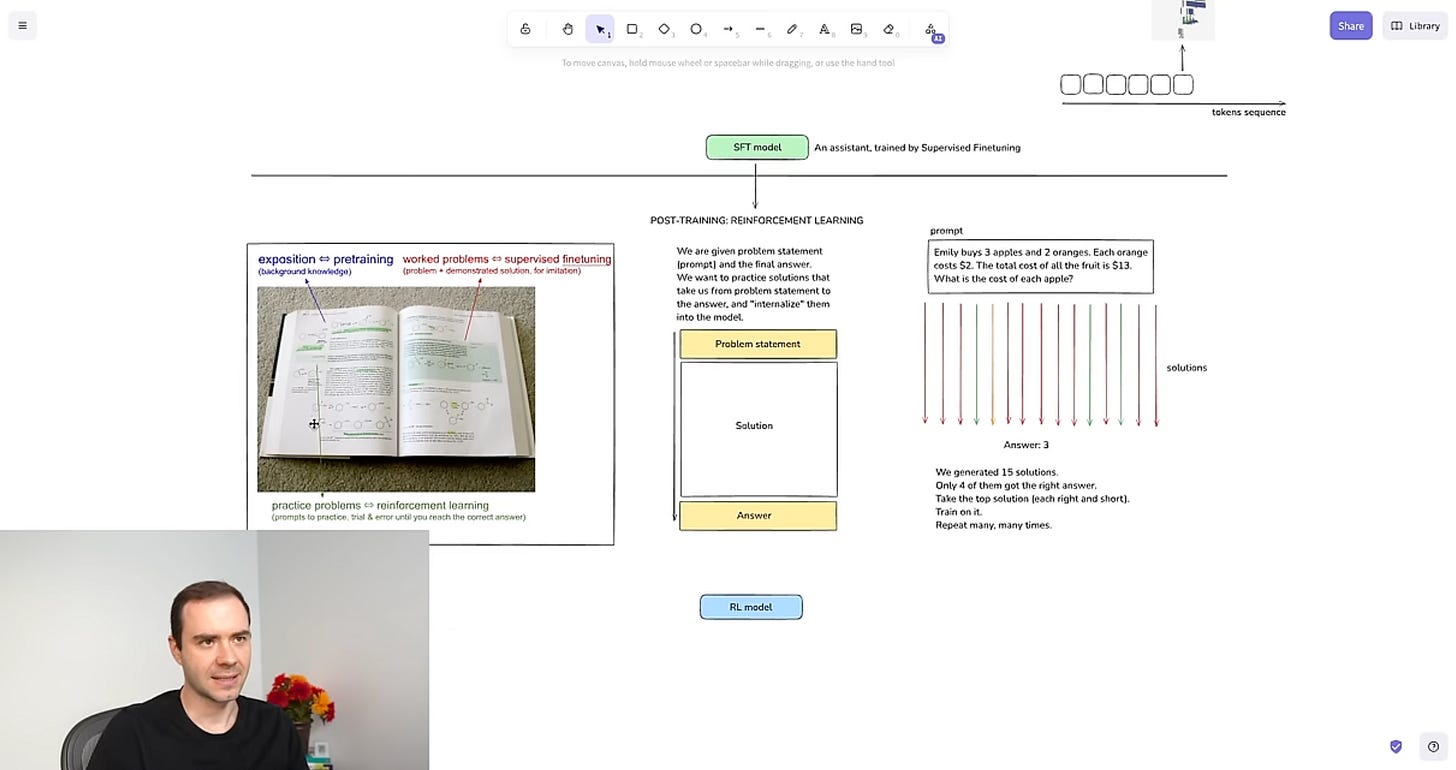

While all these new releases dominate the headlines, there’s also a fascinating deeper dive into how these models actually reason.

We often talk about chain-of-thought, prompting, fine-tuning, and “emergent behavior,” but what does it really mean when LLMs seem to “reason”? Sebastian Raschka’s latest article tackles this head-on, outlining four main strategies for building and improving reasoning models: inference-time scaling, “pure” RL, RL plus SFT, and model distillation. His key insight is that these large models can develop multi-step thinking purely through reinforcement learning, suggesting that much of what we call “emergent reasoning” may be highly advanced pattern matching plus memory. Yet even if it’s just next-token prediction at scale, the practical results are nothing short of extraordinary. If you’re looking for a clear, technical roadmap of how LLMs gain (and refine) their reasoning abilities, along with the trade-offs in cost and performance, this is a must-read.
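For a flavor of the first strategy, here’s a toy sketch of one common form of inference-time scaling: majority voting (a.k.a. self-consistency), where you sample several answers to the same question and keep the most frequent one. The “model” below is a hypothetical stub that answers correctly 60% of the time; a real setup would sample an LLM’s chain of thought at nonzero temperature and extract each final answer. The helper names are made up for illustration.

```python
import random
from collections import Counter

def sample_answer(rng: random.Random) -> str:
    # Hypothetical stand-in for one sampled LLM answer:
    # correct ("42") 60% of the time, otherwise one of two wrong answers.
    r = rng.random()
    if r < 0.6:
        return "42"
    return "41" if r < 0.8 else "43"

def majority_vote(answers: list[str]) -> str:
    # Keep the most frequent final answer across all samples.
    return Counter(answers).most_common(1)[0][0]

def accuracy(n_samples: int, trials: int = 2000, seed: int = 0) -> float:
    # Fraction of simulated questions answered correctly when we spend
    # n_samples model calls per question and majority-vote the results.
    rng = random.Random(seed)
    correct = 0
    for _ in range(trials):
        answers = [sample_answer(rng) for _ in range(n_samples)]
        if majority_vote(answers) == "42":
            correct += 1
    return correct / trials

if __name__ == "__main__":
    for n in (1, 5, 15):
        print(f"{n:>2} samples per question -> accuracy {accuracy(n):.2f}")
```

The trade-off Raschka discusses shows up directly here: more samples per question means more compute (and cost) at inference time, in exchange for higher accuracy, with no change to the underlying model.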

Image source: Sebastian Raschka

Some other great content: Andrej Karpathy released a 3-hour video breaking down how ChatGPT and other AI models work. It’s pretty awesome and covers everything from technical concepts like pre-training and post-training data, tokenization, base model inference, and memory, to more recent topics like DeepSeek and other reasoning models. It pairs nicely with Raschka’s piece on reasoning strategies; together they give you both a high-level conceptual view and a practical how-it-works breakdown.

Leaving you with some laughs

With all these changes happening fast, sometimes it’s good to have a laugh. Enter the forthcoming AI Bowl…

JIAN YANG IS BACK, BABY!! Epic ad. No notes.

While Perplexity isn’t running this one during the Super Bowl, you can win $1M by asking questions during the game (LLM as judge?!). Also look out for ads from OpenAI and Google, and for Taylor Swift in the stands. And I guess... there’s some football going on too? :)

Till next time 👋👋