"Open" for Business: Llama 3 and the Great AI Shake-Up

Why this week's wave of closed-source model announcements made me love Llama 3 even more

The Cambrian Explosion of Open Source Models

2023 was the year of ChatGPT, but 2024 will be remembered as the year of the open source AI surge. With the release of Meta AI's Llama 3, which offers up to 70B parameters trained on over 15 trillion tokens (with a 400B parameter model currently in the works!), the performance gap between open and closed source models has effectively closed. Open source models are officially “open” for business!

This week happened to be a busy one for closed source models, though, with both OpenAI (GPT-4o) and Google (updates on Gemini, Gemma, and the full AI suite) sharing major releases for their generative AI programs. While there’s already a lot of content touting how “the game has been changed again” and a fair amount of deserved excitement over some of the new features (live language translation! Multimodal inputs! Latency improvements!), what was perhaps most remarkable was how far from revolutionary both updates were.

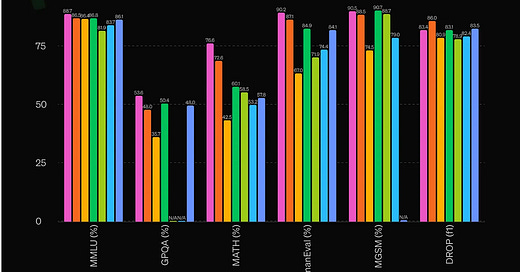

On many side-by-side evaluations, the Llama 3 400B model, which is still in training, remains fairly comparable to GPT-4o (and beats Gemini Ultra 1.0 in several). For many developers, the cost/benefit still skews open source - and this is before any response from the Meta AI team, which I am sure is already busy adding some of the newest features into its open source work. Once the 400B model is officially released later this year, adoption of Llama 3 should grow even further.

Source: Gary Marcus

In many ways, the closed-source announcements of the week only made Llama 3 look even more impressive. The implications of open source dominance are far-reaching:

Democratization: Advanced AI is now accessible to all developers, not just Big Tech.

Cost Disruption: Open source challenges the high inference fees of proprietary APIs.

Customization: Enterprises can modify and fine-tune open models in unprecedented ways.

Naveen Rao, VP of Generative AI at Databricks (previously co-founder/CEO of MosaicML), and one of our AI Center of Excellence founding members, said it best in his tweet:

Llama 3: A New Benchmark for Open Source AI

Llama 3 represents the state of the art in open source AI. Key features include:

Massive Scale: 8B and 70B parameters trained on 15T+ tokens across two 24K GPU clusters, with a 400B parameter model currently in training

Advanced Tokenization: 128k vocabulary for more efficient language processing

Context: 8K context window to handle more complex inputs. Other players, such as Gradient, have already extended the Llama 3 8B context length from 8K to over 1M tokens

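SFT, PPO, DPO: Post-training that combines supervised fine-tuning (SFT), proximal policy optimization (PPO), and direct preference optimization (DPO), using human feedback for more nuanced responses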

Source: Meta AI

Llama 3 demonstrates the power of combining top AI talent with an open source approach. It sets a new standard for open models and will accelerate innovation across the AI ecosystem.

As a reminder, Llama 2 featured three model size options (7B, 13B, and 70B), so teams already deploying Llama 2 in production should be able to swap in Llama 3’s 8B and 70B models to improve their products. And if those models are not enough for the ambition of the application, an even more capable 400B parameter model is on the way.

Pushing the Cost v. Performance Ratio

One of the most compelling aspects of Llama 3 is its exceptional cost-to-performance ratio. By training the models on an unprecedented 15 trillion tokens, roughly 75 times the compute-optimal amount for an 8B model under Chinchilla scaling laws, Meta AI has achieved remarkable performance gains without significantly increasing model size.
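The 75x figure can be sanity-checked with a few lines of arithmetic. The sketch below assumes a compute-optimal budget of roughly 200B tokens for an 8B model, which is the baseline implied by the 75x claim rather than a number stated in this post; the variable and function names are illustrative only.

```python
# Back-of-the-envelope check of the "75x" overtraining figure.
# ASSUMPTION: ~200B tokens is the Chinchilla-style compute-optimal budget
# for an 8B model. This baseline is inferred from the 75x claim, not
# stated in the post.
TRAINING_TOKENS = 15 * 10**12          # 15 trillion tokens (from the post)
MODEL_PARAMS = 8 * 10**9               # 8B parameters (from the post)
COMPUTE_OPTIMAL_TOKENS = 200 * 10**9   # assumed baseline


def overtraining_ratio(training_tokens: int, optimal_tokens: int) -> float:
    """How many times past the compute-optimal token budget training went."""
    return training_tokens / optimal_tokens


ratio = overtraining_ratio(TRAINING_TOKENS, COMPUTE_OPTIMAL_TOKENS)
tokens_per_param = TRAINING_TOKENS / MODEL_PARAMS

print(f"Overtraining ratio: {ratio:.0f}x")
print(f"Tokens per parameter: {tokens_per_param:.0f}")
```

Under that assumption the 8B model sees well over a thousand training tokens per parameter, which is the sense in which it is "extensively trained" for its size.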

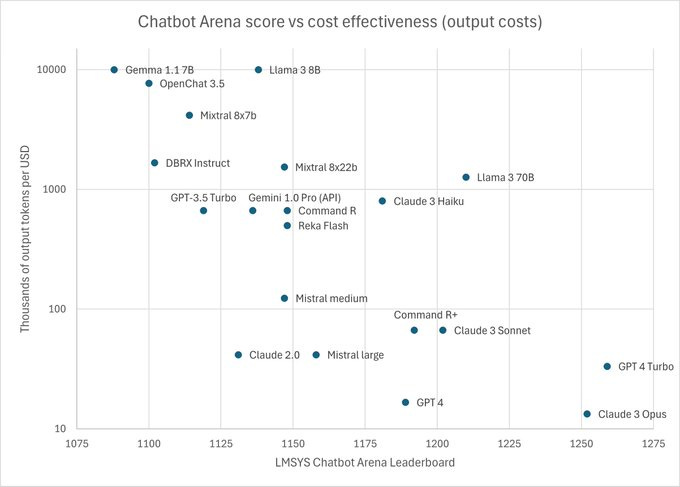

This approach challenges the prevailing wisdom that bigger is always better when it comes to language models. Instead, Llama 3 demonstrates that extensive training on high-quality data can yield state-of-the-art results even with relatively small models. The 8B Llama 3 model, for example, outperforms models many times its size on key benchmarks. And the accompanying charts show the cost effectiveness of Llama 3 (both 8B and 70B) relative to comparable models - breaking with the convention that smarter models are necessarily more expensive.

Source: Andrew Carr

The implications for enterprises are significant. Smaller models are faster and cheaper to run, making them more practical for real-world deployment. They require fewer computational resources, reducing infrastructure costs. Andrej Karpathy, a founding member of OpenAI and former director of AI at Tesla, notes that smaller models are also easier to work with, since they can be deployed on a wider range of devices, from cloud servers to edge computing nodes.

As researchers continue to push the boundaries of what's possible with smaller, extensively trained models, we can expect to see even more impressive cost-to-performance ratios in the future. At the larger end of the range, the upcoming 400B parameter model is already encroaching on GPT-4 level performance in early benchmarks. If this performance holds up in real-world applications, it could be a game-changer for enterprises seeking GPT-4 level capabilities at a fraction of the cost.

The Future: Open and Specialized Models

The open source AI paradigm shift will drive a proliferation of specialized models. Monolithic mega-models will give way to diverse ecosystems of right-sized models tailored for specific domains and use cases. Some great examples already released are Databricks’ DBRX, Microsoft’s Phi-2, the Mistral family of models, Alibaba Cloud’s Qwen, and Snowflake’s Arctic. Startups and the largest Fortune 500 companies are pushing the boundaries of what is possible with open source, and this is really just the beginning.

This is disruption at its finest: organizations are spending millions of dollars to offer state-of-the-art generative AI models for free. Enterprises will then orchestrate these models to build customized AI solutions, with proprietary data as the key differentiator. It is worth pondering: what moats are left for the closed source providers?

Putting OSS into Applications

Berkeley Artificial Intelligence Research (BAIR) shared their thoughts on an imminent shift from single, monolithic models to compound AI systems: “state-of-the-art AI results are increasingly obtained by compound systems with multiple components, not just monolithic models.”

While BAIR’s article focuses on models built as AI systems – AlphaCode 2, AlphaGeometry, Medprompt combine fine-tuned LLMs with other components such as math engines, chain-of-thought examples, and database searches – the rise of autonomous agents can also be viewed through a “compound AI system” lens. After all, AI agents are built through multiple AI and software components that together form a truly autonomous system of execution.

Autonomous agents powered by ensembles of specialized open models will transform industries. Basic chatbots will evolve into versatile digital workers capable of operating with minimal human oversight. The ability to design and optimize these multi-model systems will be a critical competitive advantage, and with open source as the backbone, ROI calculations suddenly become a lot more feasible.

For instance, an autonomous agent for customer support might employ a suite of specialized models: one for understanding customer inquiries, another for retrieving relevant information, a third for generating responses, and a fourth for sentiment analysis to ensure appropriate tone. These many open source models would work together in a careful choreography, with the output of one serving as input for another, creating a seamless and efficient customer support experience – for a fraction of the cost one would see when building on an individual closed source model.

Source: Alessio Fanelli’s NVIDIA GTC Lightning Talk
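The customer support choreography described above can be sketched as a chain of stages, each one a stand-in for a specialized model. This is a minimal illustration, not a reference architecture: the `Ticket` type, the stage functions, the keyword rules, and the knowledge base entries are all hypothetical placeholders for real model calls.

```python
from dataclasses import dataclass, replace
from typing import Callable, List


@dataclass(frozen=True)
class Ticket:
    """State passed from one stage to the next (hypothetical schema)."""
    text: str
    intent: str = ""
    context: str = ""
    reply: str = ""
    tone_ok: bool = False


Stage = Callable[[Ticket], Ticket]

# Made-up knowledge base entries, standing in for a real retrieval system.
KNOWLEDGE_BASE = {
    "refund": "Refunds are issued within 5 business days.",
    "other": "A support agent will follow up by email.",
}
IMPOLITE_WORDS = ("obviously", "stupid")


def understand(ticket: Ticket) -> Ticket:
    # Placeholder for an intent-classification model.
    intent = "refund" if "refund" in ticket.text.lower() else "other"
    return replace(ticket, intent=intent)


def retrieve(ticket: Ticket) -> Ticket:
    # Placeholder for a retrieval model or vector search.
    return replace(ticket, context=KNOWLEDGE_BASE[ticket.intent])


def generate(ticket: Ticket) -> Ticket:
    # Placeholder for a response-generation model.
    return replace(ticket, reply=f"Thanks for reaching out. {ticket.context}")


def check_tone(ticket: Ticket) -> Ticket:
    # Placeholder for a sentiment model; here, a trivial keyword check.
    polite = not any(word in ticket.reply.lower() for word in IMPOLITE_WORDS)
    return replace(ticket, tone_ok=polite)


def run_pipeline(ticket: Ticket, stages: List[Stage]) -> Ticket:
    """Feed the output of each stage into the next one."""
    for stage in stages:
        ticket = stage(ticket)
    return ticket


result = run_pipeline(
    Ticket(text="I would like a refund for my order."),
    [understand, retrieve, generate, check_tone],
)
print(result.reply, "| tone ok:", result.tone_ok)
```

Because every stage shares the same signature, any one of them can be swapped for a different open model without touching the rest of the chain, which is where the orchestration advantage comes from.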

The rise of agentic systems will have profound implications for enterprises:

Architecting for Autonomy: Enterprises will need to develop new architectures and design patterns to effectively harness the power of compound AI systems and enable truly autonomous agents.

Orchestration and Optimization: As the number and complexity of models in a compound AI system grow, efficient orchestration and optimization of these models will become critical to performance and cost management.

Explainability and Trust: Autonomous agents based on compound AI systems will require novel approaches to explainability and trust-building, as the decision-making process will be distributed across multiple models.

Open source AI models like Llama 3 will play a pivotal role in the development of compound AI systems and autonomous agents. The accessibility, customizability, and cost-effectiveness of these models will lower the barriers to entry and accelerate innovation in this space.

Adapting Your Enterprise AI Strategy

The rise of open source AI necessitates a strategic reorientation. To stay ahead of the curve, executives should:

Evaluate open source alternatives: Benchmark open models against proprietary APIs to identify the most cost-effective solutions.

Invest in model engineering talent: Build teams skilled in composing and customizing open source models for your specific use cases.

Double down on proprietary data: In the era of model commoditization, bespoke data will be your ultimate competitive advantage.

Experiment with autonomous agents: Start exploring use cases and architectures for multi-model autonomous systems.

Rebalance your inference spend: Analyze your hosted inference costs and explore opportunities to bring workloads in-house with open models.
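The first and last recommendations come down to a simple calculation. As a rough sketch, the snippet below compares monthly spend for a hosted API against a self-hosted open model. Every price, the fixed infrastructure cost, and the token volumes are made-up placeholders rather than real vendor rates, so substitute your own figures.

```python
from dataclasses import dataclass


@dataclass
class InferenceOption:
    """One way of serving a model. All numbers used below are hypothetical."""
    name: str
    usd_per_million_input: float
    usd_per_million_output: float
    fixed_monthly_usd: float = 0.0  # e.g. reserved GPUs for self-hosting


def monthly_cost(option: InferenceOption, input_tokens: int, output_tokens: int) -> float:
    """Fixed cost plus per-token charges for one month of traffic."""
    variable = (
        (input_tokens / 1e6) * option.usd_per_million_input
        + (output_tokens / 1e6) * option.usd_per_million_output
    )
    return option.fixed_monthly_usd + variable


# Placeholder prices, NOT real vendor rates.
hosted = InferenceOption("hosted proprietary API", 10.0, 30.0)
self_hosted = InferenceOption("self-hosted open model", 0.5, 0.5, fixed_monthly_usd=2000.0)

# Placeholder monthly traffic: 1B input tokens, 200M output tokens.
INPUT_TOKENS = 1_000_000_000
OUTPUT_TOKENS = 200_000_000

options = [hosted, self_hosted]
for option in options:
    cost = monthly_cost(option, INPUT_TOKENS, OUTPUT_TOKENS)
    print(f"{option.name}: ${cost:,.2f} per month")

cheapest = min(options, key=lambda o: monthly_cost(o, INPUT_TOKENS, OUTPUT_TOKENS))
print("Cheapest at this volume:", cheapest.name)
```

The fixed cost matters: at low volumes the hosted option can still win, so it is worth rerunning the comparison across the traffic range you actually expect, alongside a quality benchmark on your own tasks.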

Embracing the Open Source AI Paradigm Shift

Open source AI presents both opportunities and challenges. Enterprises must still invest in data, infrastructure, and governance. But the potential benefits are immense. Open source AI will be the foundation of the next wave of intelligent applications, just as open source software was for the web.

Llama 3 signals the dawn of a new era in AI. Enterprises that embrace this shift and learn to harness the power of open models will thrive. Those that cling to the walled gardens of proprietary solutions risk being left behind. The AI landscape has been transformed – and the future belongs to the open.