The Week AI Broke My Feed

No, but seriously, could we have *one* day without a major announcement?

What a week! A new president, a promise to go to Mars, open-source AI turned on its head courtesy of China’s DeepSeek-R1, a tease of a TikTok ban, Operator, ChatGPT Canvas, Citations, and a half-trillion-dollar infrastructure project for OpenAI. I’m not sure my eyes will ever recover from all the scrolling and eye-popping I’ve done these past few days.

🤖 The Big AI Updates of the Week

Every day this week brought at least one meaningful AI update that would have been a Big Deal on its own; together, they boggle the mind.

Interestingly, each of the updates touches on some important “sub-questions” in the field of AI right now.

Stargate / $500B infra for AI

Insane: ~2% of US GDP, plus a government mandate to build AI. A shift away from hyper-regulatory scrutiny toward “let’s build, let’s win” (the modern-day Apollo?)

And also… lots of discussion around whether the money is real. How is this structured? What is actually going on?

DeepSeek-R1

Open source again overtaking closed source

China vs. United States in AI development

OpenAI Operator

Agents (the word of the year!)

On the technical level: Computer Use + Browser Access + Test-Time Compute

Anthropic Citations

AI credibility / hallucination concerns as the perennial thorn in the side of mass adoption

Humanity’s Last Exam

The benchmarks just keep on movin’!

🤿 Ok, diiiving in!

The Stargate Project

Well, that’s one way to fundraise!… $500B, to put it in context, is ~2% of US GDP. Build, baby, build!

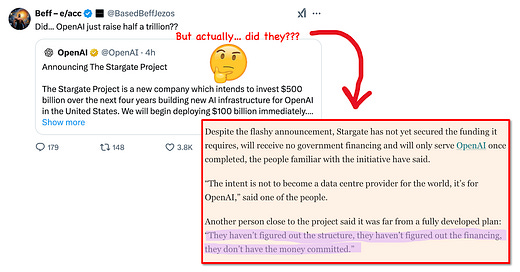

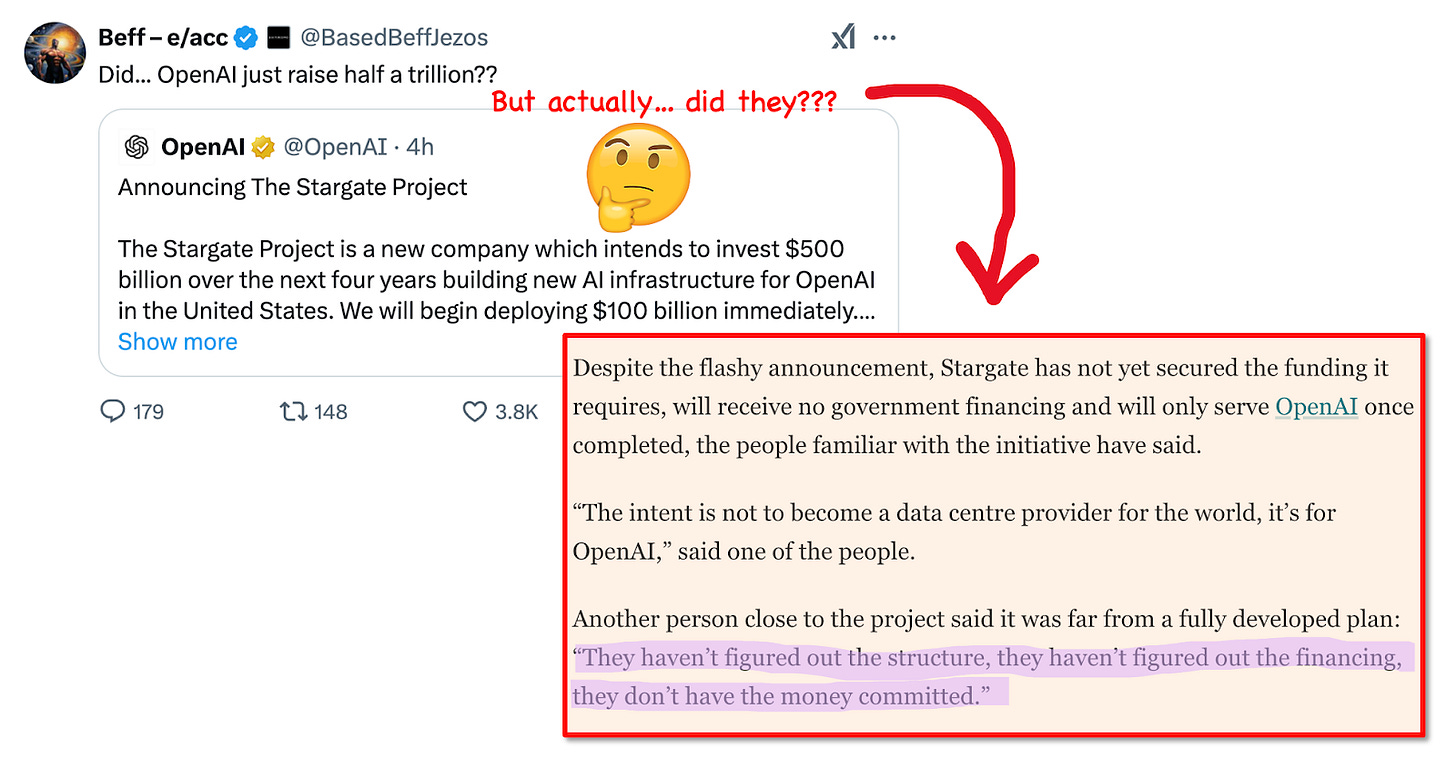

Except… it doesn’t seem like the money is actually there? It’s been a puzzling few days. Given all the confusion, I haven’t lol’ed this much during a workday in a while, with the high point being Satya Nadella saying “I’m good for my $80B” on live TV.

Beyond the $$ question, the more interesting point on Stargate IMO is how much is still unclear. Bill Gurley posted an excellent thread of open questions: What is the corporate structure? Who is CEO? Is OpenAI the sole customer? How does Oracle fit in? Is this actually a CoreWeave competitor? (Plus some other great points.) The FT answered some of those questions, sort of, with an anonymous zinger (pictured above, and reprinted here for emphasis):

“They haven’t figured out the structure, they haven’t figured out the financing, they don’t have the money committed.”

DeepSeek R1

Of all the news this week, the release of DeepSeek-R1 felt like the most 🤯: my X feed was simultaneously “what a gift this is for open source” and “ruh roh, China.”

It raises the question: will a Chinese AI lab beat Meta at its own open-source game? Lots of rumormongering on X this week about a collective freakout inside Meta’s gen AI org.

On the meatier point, though, there are two huge lessons here:

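#1: We’re all so focused on data and compute, but R1 showed that well-designed reinforcement learning can itself drive scaling gains and emergent behavior. Its precursor, R1-Zero, developed reasoning behaviors like self-verification and reflection through RL alone, with no supervised fine-tuning first.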

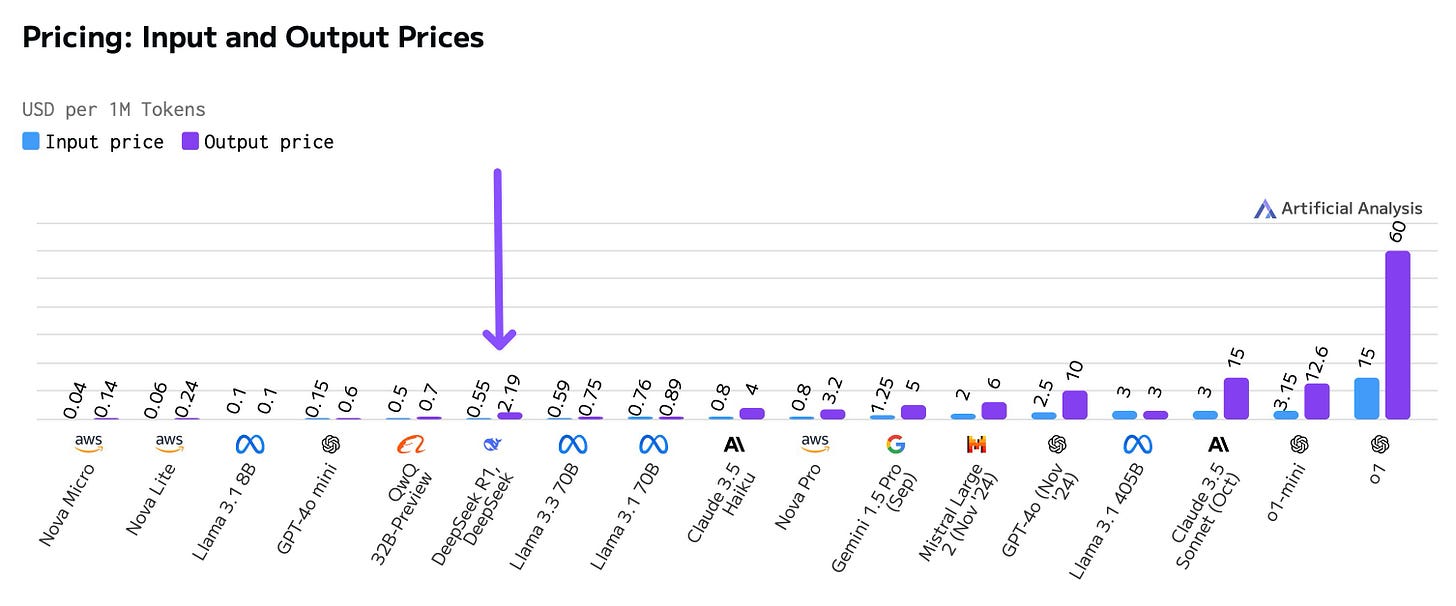

#2: DeepSeek-R1’s hosted API is ~27x cheaper than OpenAI’s o1, which means you get to slash your LLM bills. The BIG caveat: using the hosted API means your data can be used for training, i.e., it gets sent straight to China.
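To make the “27x” concrete, here’s a back-of-envelope sketch. The per-million-token prices are my assumptions (list prices as I understand them around R1’s launch, using DeepSeek’s cache-miss input rate; check the current pricing pages), and the monthly token volumes are made up purely for illustration.

```python
# ASSUMED list prices in USD per 1M tokens around R1's launch; verify before relying on them.
PRICES = {
    "o1": {"input": 15.00, "output": 60.00},
    "deepseek-r1": {"input": 0.55, "output": 2.19},  # cache-miss input rate
}


def monthly_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost for a month's input/output token volume on `model`."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


# Hypothetical workload: 200M input tokens and 50M output tokens per month.
workload = {"input_tokens": 200_000_000, "output_tokens": 50_000_000}
o1_bill = monthly_cost("o1", **workload)
r1_bill = monthly_cost("deepseek-r1", **workload)
print(f"o1: ${o1_bill:,.2f}  R1: ${r1_bill:,.2f}  ratio: {o1_bill / r1_bill:.1f}x")
# prints "o1: $6,000.00  R1: $219.50  ratio: 27.3x"
```

Because both input and output prices differ by roughly the same factor, the ratio lands near 27x regardless of the input/output mix you plug in.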

Hard for a company to justify not at least trying to cut its AI costs, so what remains to be seen is whether the US will start regulating whom you can share your data with. Can’t imagine the intelligence community would be thrilled if a bunch of American startups’ data were used to train the next frontier Chinese AI model.

OpenAI Operator

What struck me most about the Operator launch was how it felt like a step toward something bigger rather than the final form of an agent. The demo leaned more toward general-purpose computer use (human-in-the-loop, broad searches, not much around stored preferences or specific datasets) than toward a deeply personalized, task-driven agent. The paper says as much, since it focuses almost entirely on interface and browser navigation technology:

“Powering Operator is Computer-Using Agent (CUA), a model that combines GPT-4o's vision capabilities with advanced reasoning through reinforcement learning. CUA is trained to interact with graphical user interfaces (GUIs)—the buttons, menus, and text fields people see on a screen—just as humans do.”

If nothing else, OpenAI’s demo reinforced just how impactful the browser/computer use case will be for AI. We’re likely underestimating how much this will reshape software market dynamics. Integration purgatory has stalled so much innovation, and LLMs alone haven’t solved it. But interface navigation? That feels like the key to unlocking it.

Lastly, Operator is another reminder of just how critical test-time compute is becoming.

Anthropic Citations

Not a lot of chatter on this yet, but it’s worth a quick dive given what it says about a key AI adoption trend. The biggest hurdle to enterprise AI adoption remains reliability. Every F500 exec I speak with is eager to deploy AI for cost savings but hesitant to trust it in high-stakes areas where errors carry real consequences.

Anthropic’s Citations feature focuses on user-provided documents, which makes it great for internal use cases but not for open-ended research—it doesn’t offer web search (yet). OpenAI’s GPT-4 with browsing technically supports citations, but… let’s just say it’s hit-or-miss. Every session I’ve had ends with me yelling at ChatGPT for making things up (future-conscious ChatGPT, if you’re reading this, I’m sorry! 😇). We still have a long way to go on reliability, but accurate sourcing = the necessary step forward!
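For a feel of how the “user-provided documents” model works, here’s a minimal sketch that builds a Messages API request body with one plain-text document and citations enabled, then pulls the cited source text out of a response. The field names follow Anthropic’s Citations docs as I understand them at launch (verify against the current docs); the model name is a placeholder, and `sample_response` is hand-written to mirror the documented shape, not real model output.

```python
def build_citations_request(doc_text: str, title: str, question: str) -> dict:
    """Build a request body with one plain-text document and citations enabled."""
    return {
        "model": "MODEL_NAME",  # placeholder: use a Claude model that supports Citations
        "max_tokens": 1024,
        "messages": [{
            "role": "user",
            "content": [
                {
                    "type": "document",
                    "source": {"type": "text", "media_type": "text/plain", "data": doc_text},
                    "title": title,
                    "citations": {"enabled": True},
                },
                {"type": "text", "text": question},
            ],
        }],
    }


def extract_citations(response: dict) -> list[tuple[str, str]]:
    """Pair each cited answer span with the exact source text it quotes."""
    pairs = []
    for block in response.get("content", []):
        for cite in block.get("citations") or []:
            pairs.append((block["text"], cite["cited_text"]))
    return pairs


DOC = "Refunds are issued within 30 days of purchase."
body = build_citations_request(DOC, "Refund Policy", "What is the refund window?")

# Hypothetical response in the documented shape (hand-written, not real output).
sample_response = {
    "content": [
        {"type": "text", "text": "According to the policy, "},
        {
            "type": "text",
            "text": "refunds are issued within 30 days",
            "citations": [{
                "type": "char_location",
                "cited_text": DOC,
                "document_index": 0,
                "document_title": "Refund Policy",
                "start_char_index": 0,
                "end_char_index": 46,
            }],
        },
    ]
}

print(extract_citations(sample_response))
# prints [('refunds are issued within 30 days', 'Refunds are issued within 30 days of purchase.')]
```

The appeal for reliability is that every cited claim points back to an exact span of *your* document, which a reviewer can check mechanically.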

Humanity’s Last Exam

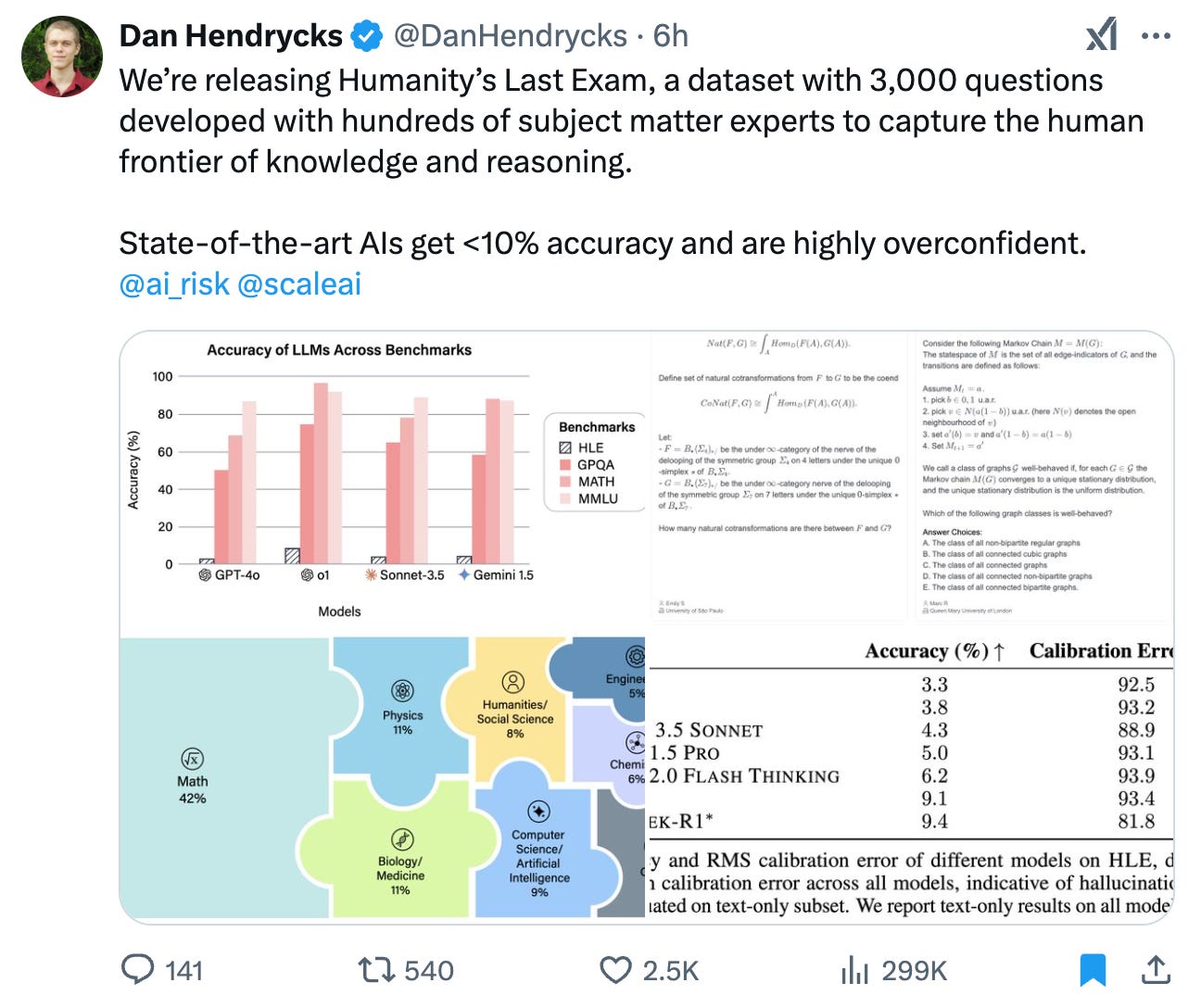

Last but not least, everyone’s favorite topic: BENCHMARKS! Dan Hendrycks (Center for AI Safety) and Scale AI collaborated to create “Humanity’s Last Exam,” a 3,000-question test for AI.

I couldn’t help but chuckle when I saw this—because every time AI clears one bar, we just go ahead and raise it. FWIW I actually think this is a fascinating project. But it’s hard not to notice how benchmarks tend to reflect where we think intelligence lies, only to be rewritten once models catch up. 🙃🙃

Of course, we have benchmarks for a reason. You can’t control what you don’t understand or can’t measure. So I’ll leave you with the very reassuring parting words of an OpenAI safety researcher. 😅