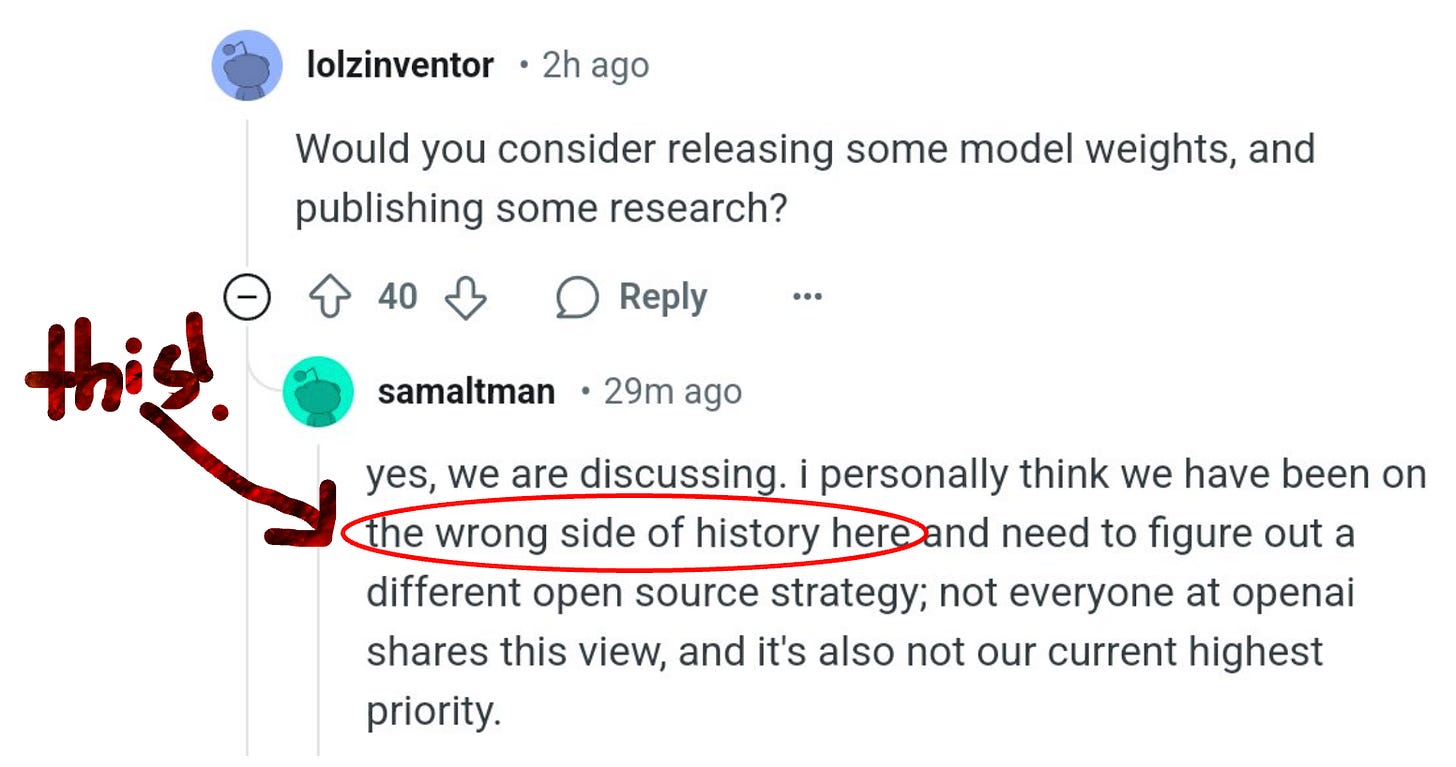

I was tempted to begin this blog post by throwing in what would likely have been the ten-thousandth take on DeepSeek (ah, but don’t worry, I still will). But when I reflected on everything that happened this week, the comment I imagine will keep haunting me came from Sam Altman’s AMA: “the wrong side of history.”

Lost in the discourse this week, which was again dominated by DeepSeek and its far-reaching impact on the field of AI and on the markets beyond, was the good that comes from truly open AI. To be sure, many voices strongly articulated why the news was a huge net positive for the ecosystem (Clem Delangue’s feed is a good place to start for that), but this is about more than open vs. closed source in the literal sense. The most thought-provoking article I read all week was Benn Stancil’s, in which he reflects on how we are likely way underestimating AI’s impact on the world by thinking about it too literally. He says:

Part of me bristled when I read that section. I am not an AI doomer, and I resist any notion that could lead society to fight the progress of technology.

And yet. There is a deep truth in it too. In how we organize ourselves as a society, we are a different species today than we were even a decade ago. And I can’t help but think that continuing to support open AI (models that are not black boxes, models that show us their chain of thought and allow us, as best we can, to understand) is not just good for us and the technology ecosystem, but also Good, capital G, period.

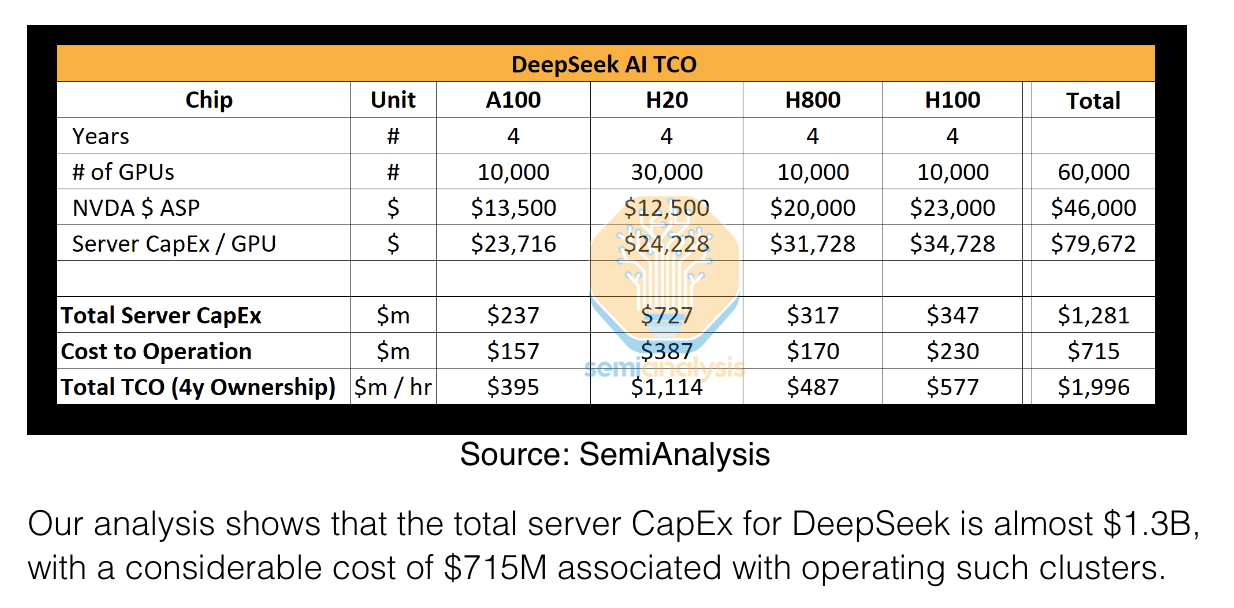

Still, there was a strange dilemma in the air last week. On the one hand, we should very much view the DeepSeek researchers as friends who have given us a true open-source gift in the form of R1, as well as their previous models. But is being pro-R1, and pro-DeepSeek’s innovations, in some ways anti-US? I don’t know if I have a clear answer. There’s a lot about DeepSeek that makes me nervous, and that should probably make you nervous too. Never mind that its release wiped roughly a trillion dollars (!) off market caps in one fell swoop, or that we cannot know the motivations of High-Flyer (the hedge fund behind it), let alone those of the country it hails from. It’s that its consumer app rose to #1 in the app stores, and the data it collects will clearly go straight to a country we are slowly entering a cold war with. It’s that, in some ways, the amount spent is a bit beside the point:

I’ll keep supporting open-source innovation regardless of where it originates. However, it would be great to see American labs step up and give our ecosystem a helping hand, too. Mark Zuckerberg, through Meta, is carrying much of the United States’ open-source AI on his back (and his coffers), announcing another $65B in investment for this year alone. In light of R1, those Llamas must be feeling lonely. So I’m glad to see Sam Altman, and by extension OpenAI, reflecting and reconsidering what the right side of history is.

Some more scattered thoughts and AI announcements that piqued my interest this week:

Did R1 really only cost $6M?

In short: no. The team at SemiAnalysis wrote an excellent deep dive and it’s worth reading it in full.
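For context, the widely circulated ~$6M number traces back to DeepSeek’s V3 technical report (V3 being the base model R1 was built on), which priced only the final training run: about 2.788M H800 GPU-hours at an assumed rental rate of $2 per GPU-hour. The back-of-the-envelope sketch below reproduces that arithmetic using the report’s stated figures; the report itself notes that the total excludes the costs of prior research and ablation experiments, which is exactly the gap SemiAnalysis digs into.

```python
# Back-of-the-envelope reproduction of the headline training-cost figure.
# GPU-hour numbers and the $2/hour rate are those stated in the DeepSeek-V3
# technical report; they cover only the final V3 training run.
GPU_HOURS = {
    "pre_training": 2_664_000,
    "context_extension": 119_000,
    "post_training": 5_000,
}
RENTAL_USD_PER_GPU_HOUR = 2.0  # assumed H800 rental price used in the report


def headline_cost(gpu_hours: dict[str, int], rate: float) -> float:
    """Return total GPU-hours multiplied by an hourly rental rate."""
    return sum(gpu_hours.values()) * rate


total_hours = sum(GPU_HOURS.values())
cost = headline_cost(GPU_HOURS, RENTAL_USD_PER_GPU_HOUR)
print(f"{total_hours:,} GPU-hours -> ${cost / 1e6:.3f}M")  # → 2,788,000 GPU-hours -> $5.576M
```

Nothing in that product covers buying the GPUs, staff, failed or exploratory runs, or the later RL stage that produced R1.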

The Week You Got Sick of Hearing About Jevons Paradox

Competitors

The word “cope” seemed to be trending on X. There was a lot of truth in the pushback (no, R1 didn’t cost $6M to train), but the responses felt… rather defensive.

Anthropic’s response, via Dario Amodei, was essentially that we need even tighter chip export controls.

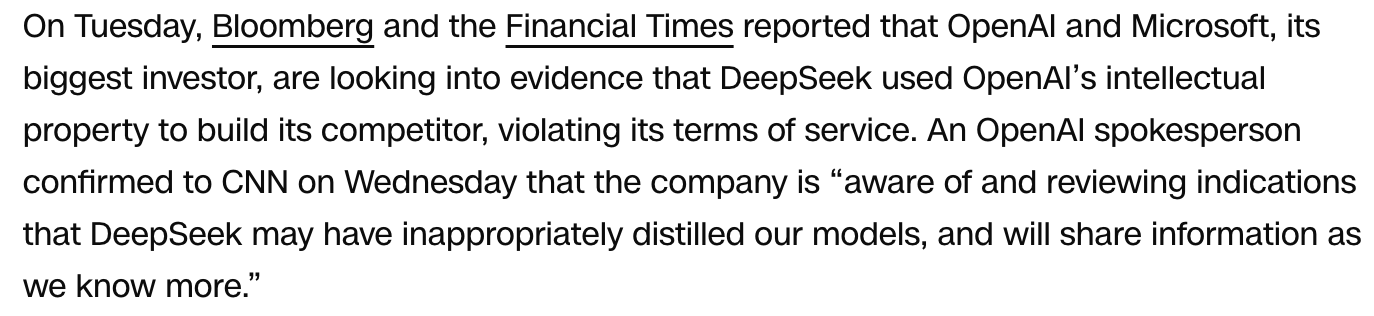

Meanwhile, OpenAI essentially claimed that DeepSeek had used its work to train R1.

True or not, I laughed when I saw this meme (remember all the copyright lawsuits OpenAI is facing?):

Speaking of copyright…

Buried under all the new model releases and the continued excitement (and equal concern) over DeepSeek was the Copyright Office’s release of official guidance on AI and copyright, which can be summed up as follows:

Human Authorship: The Copyright Office makes it clear that only humans can be authors. If an AI independently generates your content, that content won’t qualify for protection.

Need for Transparency: When you register a work, you need to identify which parts, if any, were generated by AI.

AI as a Creative Tool: If AI is used as a tool under a human’s direction, the human contributions can still be copyrighted (i.e. human selection, arrangement, or editing of the output, with a human in the loop; the Office notes that prompts alone generally don’t give enough human control). However, material produced solely by AI, without human authorship, is not eligible.

Reinforcement Learning & Move 37

Worth remembering: part of R1’s power comes from reinforcement learning. Karpathy posted about the concept of a “Move 37” (a novel breakthrough discovered by a model through large-scale RL, named after AlphaGo’s famous move against Lee Sedol); the full thread merits reading, and here is a snippet:
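For a concrete taste of what RL looks like in R1’s case: per DeepSeek’s R1 paper, the RL stage uses GRPO (Group Relative Policy Optimization, introduced in their earlier DeepSeekMath work). The model samples several answers to the same prompt, each answer is scored with a rule-based reward (e.g. is the final answer correct?), and each answer is judged against its own group’s average instead of against a learned value model. The minimal sketch below shows only that advantage computation, assuming a simple 0/1 correctness reward and a population standard deviation; the full method also involves a clipped policy-gradient objective and a KL penalty, which are omitted here.

```python
import statistics


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Score each sampled answer relative to its own group (GRPO-style).

    Above-average answers get a positive advantage (and are reinforced);
    below-average answers get a negative one. No learned value model is needed.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # assumption: population std over the group
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical group of 4 answers sampled for one math prompt,
# scored 1.0 if the final answer is correct and 0.0 otherwise.
rewards = [1.0, 0.0, 0.0, 1.0]
print([round(a, 3) for a in group_relative_advantages(rewards)])  # → [1.0, -1.0, -1.0, 1.0]
```

If every answer in a group earns the same reward, all advantages come out as zero, so that prompt contributes no learning signal; the model only learns where some attempts succeed and others fail.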

MOAAAR RELEASES

Lest you think the rest of the world stopped for R1, rest assured, it did not. A few notable releases:

o3-mini!

OpenAI released o3-mini, and surprise, it’s available to free ChatGPT users! The Latent Space folks wrote an excellent analysis of why o3-mini had to be free, and what’s going on with the price of intelligence.

Janus

Not content with all the fanfare around their reasoning model R1, DeepSeek also released Janus-Pro, the latest in its Janus line of open-source multimodal models.

Qwen2.5-Max

Alibaba released Qwen2.5-Max, which outperforms DeepSeek V3 on some benchmarks. It’s a high-performance, efficient Mixture-of-Experts (MoE) language model (to learn more, see the great MoE overview I read this week, which I included at the end of this blog post).

Goose

Jack Dorsey, through Block, released Goose, an open-source, on-machine AI agent that seems to be in direct competition with OpenAI’s Operator.

Technical deep-dive of the week

As promised: MoE architecture keeps popping up, so do yourself a favor and read Cameron Wolfe’s excellent overview of MoE.
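To make the core idea concrete: in an MoE layer, a small router scores every expert for each token, only the top-k experts actually run, and their outputs are mixed using the router’s renormalized weights. That is how MoE models like Qwen2.5-Max and DeepSeek V3 can have a huge total parameter count while activating only a fraction of it per token. Below is a toy, framework-free sketch of that routing step using one common variant (softmax over the selected experts’ scores); the expert count, k=2, and the tiny scaling “experts” are illustrative assumptions, not either model’s actual configuration.

```python
import math
from typing import Callable

Expert = Callable[[list[float]], list[float]]


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token: list[float], router: list[list[float]],
                experts: list[Expert], k: int = 2) -> list[float]:
    """Send one token through its top-k experts and mix their outputs."""
    # Router score per expert: dot product of the token with that expert's router row.
    scores = [sum(w * x for w, x in zip(row, token)) for row in router]
    # Keep only the k highest-scoring experts; the others are never evaluated.
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Renormalize the selected experts' scores into mixing weights that sum to 1.
    weights = softmax([scores[i] for i in top])
    out = [0.0] * len(token)
    for weight, i in zip(weights, top):
        expert_out = experts[i](token)
        out = [o + weight * y for o, y in zip(out, expert_out)]
    return out


# Hypothetical toy setup: 4 "experts" that each scale the input by a constant,
# and a router whose rows favor different input directions.
experts: list[Expert] = [lambda v, c=c: [c * x for x in v] for c in (1.0, 2.0, 3.0, 4.0)]
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

out = moe_forward([2.0, 1.0], router, experts, k=2)
print([round(v, 3) for v in out])  # → [2.538, 1.269] (only experts 0 and 1 ran)
```

The design point is sparsity: with k=2 of 4 experts, half of the expert compute is skipped for this token, while all four experts’ parameters still count toward the model’s size.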

Lastly, on the lighter side, this week brought some of the funniest memes I’ve seen on AI Twitter. I’ll leave you with my two favorites: