Welcome to the Age of the Agent Wars

OpenAI, Gemini, Manus, and the Battle for AI’s App Layer. (Oh, and the market’s rough)

Errrr, I’d just rather not address the stock market. I know it was a Big Deal this week. I also felt sadness when I looked at my portfolio. But you didn’t come here to be sad, did you? You came here to be happy. AI knowledge = happiness, everyone knows. So, the above is a nice funny tweet for us all to laugh at together, and now we are going to let those red squares go.

(perfect transition in 3, 2, …)

The agent wars are heating up. With OpenAI’s latest agent-builder release (the Responses API and the Agents SDK, the successor to Swarm), we're seeing an acceleration in the app layer of AI, shifting the conversation from foundational models to the practical tools needed to actually build useful agents. In a landscape that's already cluttered with tools promising "agentic capabilities," OpenAI’s latest move either simplifies the field or tightens its grip on the developer ecosystem, depending on who you ask.

OpenAI's Agents Platform: Simplicity or Lock-in?

The OpenAI ecosystem grows tighter. The new suite, an all-in-one developer toolkit featuring integrated web search, file retrieval, and computer use, is aimed squarely at simplifying AI agent-building for developers. It’s a classic walled-garden move. For many startups, this integrated simplicity is a dream: why juggle 10 tools when you can have one seamless OpenAI-powered experience? Tool sprawl is the worst. At the same time, I’m seeing an increasing number of startups say they are switching to Gemini due to costs, and all of these releases from OpenAI are really meant to lock developers into its proprietary ecosystem.

Simplicity or freedom? Pick your poison!

The Latent Space folks published an awesome deep dive into this latest release, and for those who prefer consuming their information via podcasts, check out their episode here. The quick highlights:

Responses API: Streamlined endpoint combining Assistants API features with chat completions, supporting complex tasks, tool chaining, and simplified state management.

Web Search Tool: Real-time retrieval with precise inline citations, powered by specialized fine-tuned models (gpt-4o-search-preview and gpt-4o-mini-search-preview), ideal for accurate answers using live data.

Computer Use Tool (CUA): i.e., a built-in Operator. It enables robust software interaction and browser automation, and performs strongly on benchmarks (38.1% OSWorld, 58.1% WebArena, 87% WebVoyager).

File Search Tool: Integrates document retrieval and RAG for efficient handling of structured and unstructured data.

Agents SDK: Official successor to Swarm, offering built-in observability, intelligent agent handoffs, input/output validation, and improved tracing, simplifying multi-agent workflows.
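If "agent handoffs" and "tracing" sound abstract, here is a toy version of the idea in plain Python. To be clear, this is a hypothetical sketch and not the Agents SDK's actual API: the `Agent`, `Handoff`, and `run` names are made up for illustration, and a hard-coded keyword rule stands in for the routing decision a model would normally make. A triage agent either answers or hands control to a specialist, and the runner records which agents acted along the way.

```python
from dataclasses import dataclass, field
from typing import Callable, Union


@dataclass
class Handoff:
    """Hypothetical signal: transfer control to the agent with this name."""
    target: str


@dataclass
class Agent:
    """Hypothetical toy agent (not the Agents SDK class of the same name)."""
    name: str
    # Given the task, return either a final answer (str) or a Handoff.
    respond: Callable[[str], Union[str, Handoff]]
    # Agents this one is allowed to hand off to, keyed by name.
    handoffs: dict[str, "Agent"] = field(default_factory=dict)


def run(start: Agent, task: str, max_turns: int = 5) -> tuple[str, list[str]]:
    """Follow handoffs until some agent returns a final answer.

    Returns the answer plus a simple trace of which agents acted, in order.
    """
    agent = start
    trace: list[str] = []
    for _ in range(max_turns):
        trace.append(agent.name)
        result = agent.respond(task)
        if isinstance(result, Handoff):
            # Only allow handoffs the agent was explicitly configured with.
            if result.target not in agent.handoffs:
                raise ValueError(f"{agent.name} cannot hand off to {result.target}")
            agent = agent.handoffs[result.target]
            continue
        return result, trace
    raise RuntimeError("max_turns exceeded without a final answer")


# Stand-ins for the web search and file search tools described above.
search = Agent("search", lambda t: f"[search results for: {t}]")
files = Agent("files", lambda t: f"[matching documents for: {t}]")

# Keyword routing is a placeholder for a model-made decision.
triage = Agent(
    "triage",
    lambda t: Handoff("files") if "pdf" in t.lower() else Handoff("search"),
    handoffs={"search": search, "files": files},
)

answer, trace = run(triage, "Summarize the Q3 PDF")
print(answer)  # [matching documents for: Summarize the Q3 PDF]
print(trace)   # ['triage', 'files']
```

The trace list is the (very) poor man's version of observability: when a multi-agent workflow gives a weird answer, the first question is usually "who handled this?", and that's exactly what it records.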

Google's Gemma 3: Compact, Efficient, and Accessible

Google introduced Gemma 3, positioning it as the most capable model you can run efficiently on a single GPU or TPU (whoa). Built from the same research and technology as Gemini 2.0, Gemma 3 is designed specifically with accessibility in mind: by minimizing hardware requirements, it opens the door to broader developer adoption. This lightweight approach makes Gemma 3 versatile enough to deploy across a wide range of applications, which is particularly attractive for developers wary of OpenAI’s tighter integration and escalating costs. It’s also multimodal, supports long contexts, and covers more than 140 languages to boot. For further reading, Simon Willison wrote a great in-depth technical review.

But wait, there’s more! As AI News by Smol AI (a great resource to follow to be on top of all things AI) succinctly put it:

“It's not every day that @GoogleDeepMind beats both DeepSeek (with Gemma 3) and OpenAI (with 2.0 Flash Native Image Gen)”

Manus: China's Answer to Operator

The real wildcard this week was Manus, a general-purpose AI agent launched by Monica, a company based in Wuhan, China. Think of it as a competitor to OpenAI’s Operator.

The product has gone viral, partly thanks to a high-publicity, invite-only launch, and partly because AI Twitter loves the ~~new hot thing~~, and Manus was definitely it this week. I lol’ed when I saw the post below; it’s just so real:

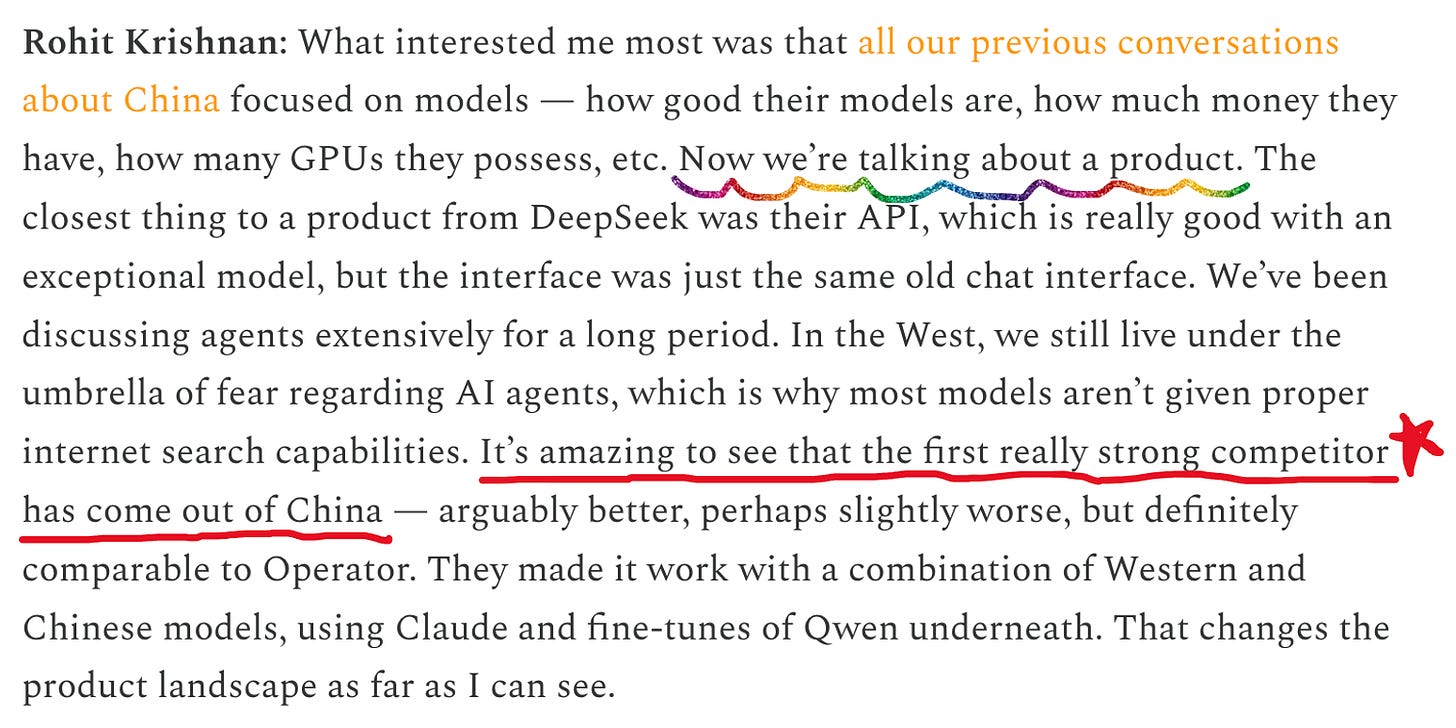

Why was the reaction so strong? Perhaps because it felt like a “DeepSeek moment.” In an interview with ChinaTalk, Rohit Krishnan (writer of the very popular Strange Loop Canon) had this to say:

The launch was not without controversy, however. (When is it ever?)

A potentially significant security concern surfaced when a user demonstrated that the agent would openly provide access to its own runtime sandbox files. In doing so, it inadvertently revealed that Manus might be running on Anthropic’s Claude models, raising serious questions about data security and compliance, particularly within China.

Monica's founder Peak confirmed that Manus uses multiple models tailored to different tasks, and also gave a pretty robust overview of what is going on in the backend with the sandboxes, addressed its use of open source, and more.

Regardless of how novel the tech may or may not be, and whether or not it’s just a wrapper around (insert your favorite: Claude, Browser Use, etc.), Manus remains a pretty neat and useful product, and a pretty decent competitor to Operator.

Unsurprisingly, OpenAI does not like this development. They do not like it at all. In fact, they’d like to humbly request that we simply ban anything coming out of China. ¯\_(ツ)_/¯

I’m actually super rah rah go America 🇺🇸🇺🇸, so I don’t necessarily disagree with the fear here, but the naked attempt to block competition this way… not my cup of tea.

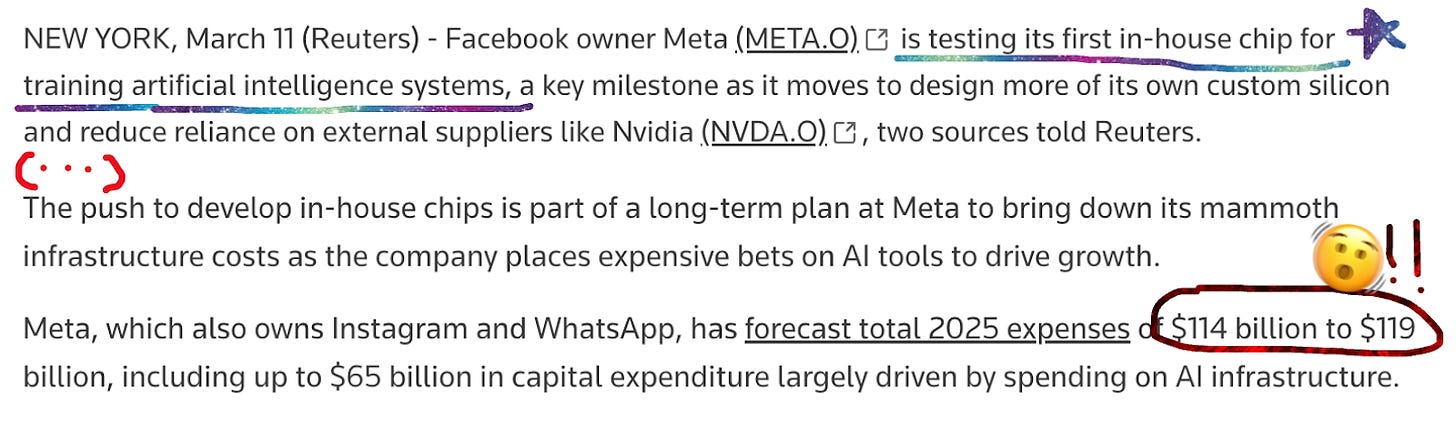

Meta Enters the (Hardware) Chat

Just a few weeks ago, reports surfaced that OpenAI was finalizing its first custom chip design and would soon send it to TSMC for tape-out and fabrication. Now it seems yet another player, AI darling Meta, wants to diversify away from over-reliance on NVIDIA. This week’s report is that Meta is testing its first in-house AI training chip.

This vertical integration push underscores a few broader trends in the world of LLMs:

The more important AI becomes, the less sensible it seems to be over-reliant on a single near-monopolistic player (i.e., NVIDIA). Companies end up totally beholden to its supply, its newest innovations, etc.

Increasing competition at the hardware layer. I think that in the late 2020s and into the 2030s we will see meaningful competition arise against NVIDIA, either because the largest customers want some diversification and build their own chips (à la OpenAI and Meta), or because the pie still seems really large and new hardware entrants will make waves (looking at you, Tenstorrent!).

CAPEX spend is real. One way to deal with the rising costs is, well, to cut them! AI isn’t going anywhere, so how can we make it cheaper to build? Seems like one version of the answer may be custom hardware. As everyone races toward cheaper, better AI infrastructure, expect more aggressive moves from big tech.

This is not without precedent. Google has already seen much success with its TPUs (Gemini 2.0 was trained on its Trillium TPUs). And given the insane level of innovation Meta has brought to open source, and thus to the entire foundation model ecosystem, with its Llama series of models, I think we can expect great things here. So I, for one, am excited to see what Meta is cooking.

PDFs are the only AGI I need

At Decibel we like to joke that in the end, all users really want from an AI application is a nice PDF. So it was nice to see PDFs get some foundation model lab love! Mistral just released Mistral OCR, which they are hailing as “the world’s best document understanding API.” Tbh that is just a fancy way of saying “we read PDFs,” but like, really well!

Jokes aside, this is actually really useful. As they note in the launch blog, an estimated >85% of the world’s organizational data is stored as documents, which are mostly PDFs. Better comprehension of all their elements (text, images, charts, math, etc.) will be super useful for pretty much every AI application. I’m here for it. Go Mistral!

🐅🐅🐅🇨🇳Six Tigers 🇨🇳🐅🐅🐅

You may have seen Manus come out this week, and thought to yourself, “wow I hadn’t heard of this startup Monica. And also wow DeepSeek took me by surprise. What am I missing re: China? What should I know? Who is coming up?”

Look no further! I read a great overview this week of the Chinese AI startups to watch out for. The post in full is worth reading, but the tl;dr from the post is that “A group of artificial intelligence (AI) start-ups, known as the Six Tigers, are emerging as China’s best hopes for reaching the frontier of ChatGPT-like technology.” These startups are listed below, and an in-depth overview is in the linked blog.

01.AI 零一万物

Baichuan Intelligence 百川智能

Zhipu AI 智谱AI

StepFun 阶跃星辰

MiniMax

Moonshot 月之暗面

They’ll be names to watch out for!

Parting thoughts

In AI, one day you're in, the next you're out.