When Infrastructure Eats Application, and Legal Eats Us All

deeper shifts in how we build, deploy (and sue) intelligence

Four forces converging this week:

Context curation over raw prompting: The focus is shifting from prompt engineering to context engineering - systematically managing what information reaches the model and how it's structured.

Post-training > pre-training: Reinforcement-learning loops, synthetic feedback, and tool calls are mattering more than raw scale and crawl data.

Infrastructure goes vertical: Companies that own the underlying pipes are rapidly moving up-stack to capture more value, from voice assistants to app engines.

The legal reckoning arrives. Training on copyrighted material is fair use, but obtaining that material illegally isn't – and the bills are starting to come due.

Let’s dig into the launches – and the legal curveballs chasing them!

Context Engineering is the new “it” term

Permit me a walk down memory lane. Two years ago (a whole epoch in the killifish-speed AI time) the Twitter meme cycle went: “omg vector DBs”, “omg agents” (meaning LangChain-style frameworks, not end-user apps), then “omg RAG.”

Now, context engineering is taking the baton.

The very first note I can find about context engineering dates back to April from Ankur Goyal (Braintrust), explaining how RAG is just one flavor of context engineering, and defining the term as “the task of bringing the right information (in the right format) to the LLM.”

Then these past 2 weeks, an avalanche of context engineering:

Cognition published Walden Yan’s “Don’t Build Multi-Agents” (June 12), arguing that the hard part of production agents is automatically curating and maintaining the right context.

Tobi Lütke weighed in on June 18: “Context engineering” over “prompt engineering” as a label for the real craft.

Harrison Chase (LangChain) followed with a June 23 thread and companion blog post breaking down practical patterns for context engineering in agent frameworks.

Karpathy nails (as usual) both an explanation for context engineering AND putting a fine point on why building great LLM applications is still very very hard:

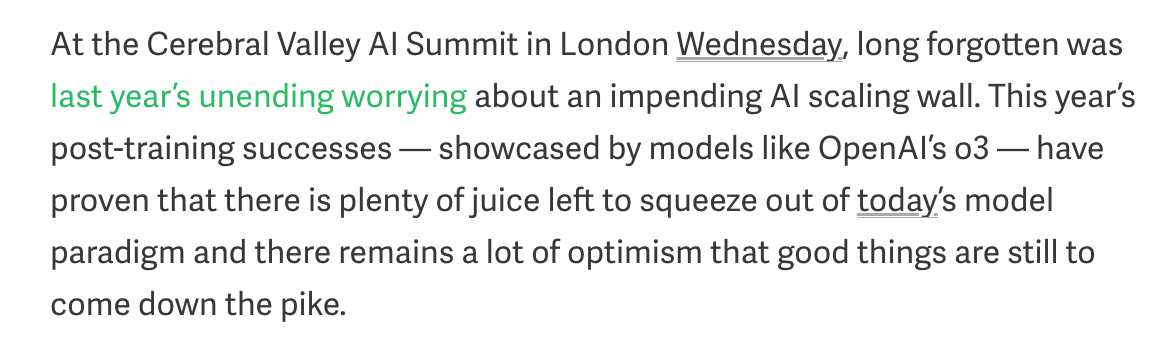

Buzzword aside, I think this is a critical thing to understand. AI infrastructure as a category is maturing because now we have a much better idea of what we are building, and how to make it great. Importantly, the underlying foundation models are undergoing a shift; whereas everything used to be about pre-training, and in 2024 many people were worried that we had hit a scaling wall, the rise of reasoning models – and thus the push to post-training – has brought new light to what the future state looks like for AI engineering. From the CVAI Summit this week:

We’ve cycled from 2023’s hype around raw prompting, to the RAG-everywhere phase, and now with reasoning models to post-training techniques. The spotlight is shifting away from ever-larger pre-train data toward reinforcement-learning-driven refinement, tool-augmented reasoning, and long-horizon memory. Talent acquihire notwithstanding, Meta’s $14.8B investment in Scale AI signals exactly that pivot: better post-training data and feedback loops, not just bigger corpora.

But the models alone are still not enough; systems needs to be dynamic. As the LangChain post notes, “LLMs cannot read minds - you need to give them the right information. Garbage in, garbage out.”

Everything’s context!

Google’s Dev Upgrades + owning the workflow

While Apple is completely asleep at the wheel, its fellow FAANG is definitely not!!! Google is shiiiiipping. This week we saw Gemini CLI, Imagen 4, and the new Gemma 3n all come out. The CLI brings Gemini 2.5 Pro straight into the terminal with a generous free quota (60 req/min, 1,000/day) and full Apache-2.0 code. Imagen 4 joins the Gemini API at $0.04 per image (Ultra at $0.06) with far cleaner text rendering, while Gemma 3n squeezes a 5-8 B-param multimodal model onto 2-3 GB of RAM for on-device inference. The net effect is that Google is tightening its grip on every stage of the dev workflow – from shell, to notebook, to edge hardware.

Infra goes vertical—fast

The middle of the stack wants a bigger slice of the pie, so it’s marching up to the UI:

ElevenLabs launches 11.ai. A personal, low‑latency voice assistant that rides ElevenLabs’ own speech infra but layers MCP integrations with Perplexity, Linear, Slack, and Notion to run multi‑step workflows. Lesson: when you own the pipes, shipping the faucet is cheap.

OpenAI prototypes a collaboration suite. With the Connectors launch, and rumored collaboration features, ChatGPT may be morphing into a multiplayer doc editor with native storage – squarely in the territory of Office 365 and Google Workspace. Connectors are still in beta and multiuser features are still a rumor; but if they go mainstream, every productivity vendor built on OpenAI’s APIs wakes up to a new, very large competitor.

Anthropic ships Claude Artifacts. Claude’s new dedicated space for artifacts turn a chat prompt into a hosted mini‑app you can share with a link. Claude scaffolds UI + logic, runs it inside the chat, and bills usage to each viewer’s own Claude account—so creators dodge API costs and deployment hassles. External APIs and storage aren’t live yet, but this first cut already shifts Claude from assistant to lightweight no‑code platform.

The economics line up. As the Nextword post on AI infra verticalization argues: infra margins compress while product margins expand; build cost is near zero; and telemetry gives infra providers an unfair jump‑start. The endgame is obvious – own more of the workflow, capture more of the value.

⚖️ The “Call your lawyers” Mega Section ⚖️

Copyright Gets Real (Expensive) 💸

Remember when everyone thought AI training was the Wild West? Well, the sheriff just rode into town, and his name is Judge William Alsup.

In a landmark ruling that has every AI company speed-dialing their legal teams, here's what went down:

The Good News: Training AI on copyrighted material = fair use! 🎉

The Bad News: Stealing that material first = still very illegal! 🚨

Anthropic just learned this the hard way. The judge basically said "Look, teaching your AI to write by showing it books is cool. But downloading those books from Library Genesis? That's gonna cost you."

And by "cost you," we're talking potentially billions. With a B. As in "Better start that Series Z fundraise now."

The implications are massive:

Image generators like Midjourney (currently being sued by Disney) are probably sweating bullets

OpenAI's upcoming court date with the NYT just got a lot more interesting

Every AI startup just added "content licensing budget" to their burn rate

The days of "download first, ask permission never" are officially over. RIP to the cowboy era of AI training.

iYo vs io

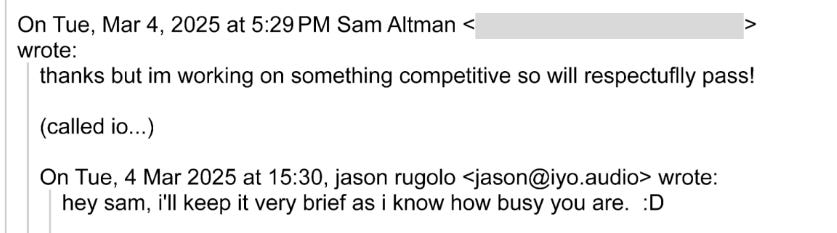

Back at home one of my favorite sayings is “rir pra não chorar” (which means we laugh so we don’t cry). It feels very apropos here. I half thought it was a joke when I read that there was a company called iyO that is claiming io is a ripoff name after pitching OpenAI. (Also remember when OpenAI officially announced “io” during Google I/O?) I mean, someone get these people a naming consultant. You’ve got billions of dollars. What is going on!! 😂

Anyways, tough for Jason Rugolo from iyO, because Sam kept receipts:

To me the real kicker here is that… none of these devices even exist yet! OpenAI has said their device won't even be available until at least 2026. So we're essentially watching a legal battle over naming vaporware. What a time to be alive!

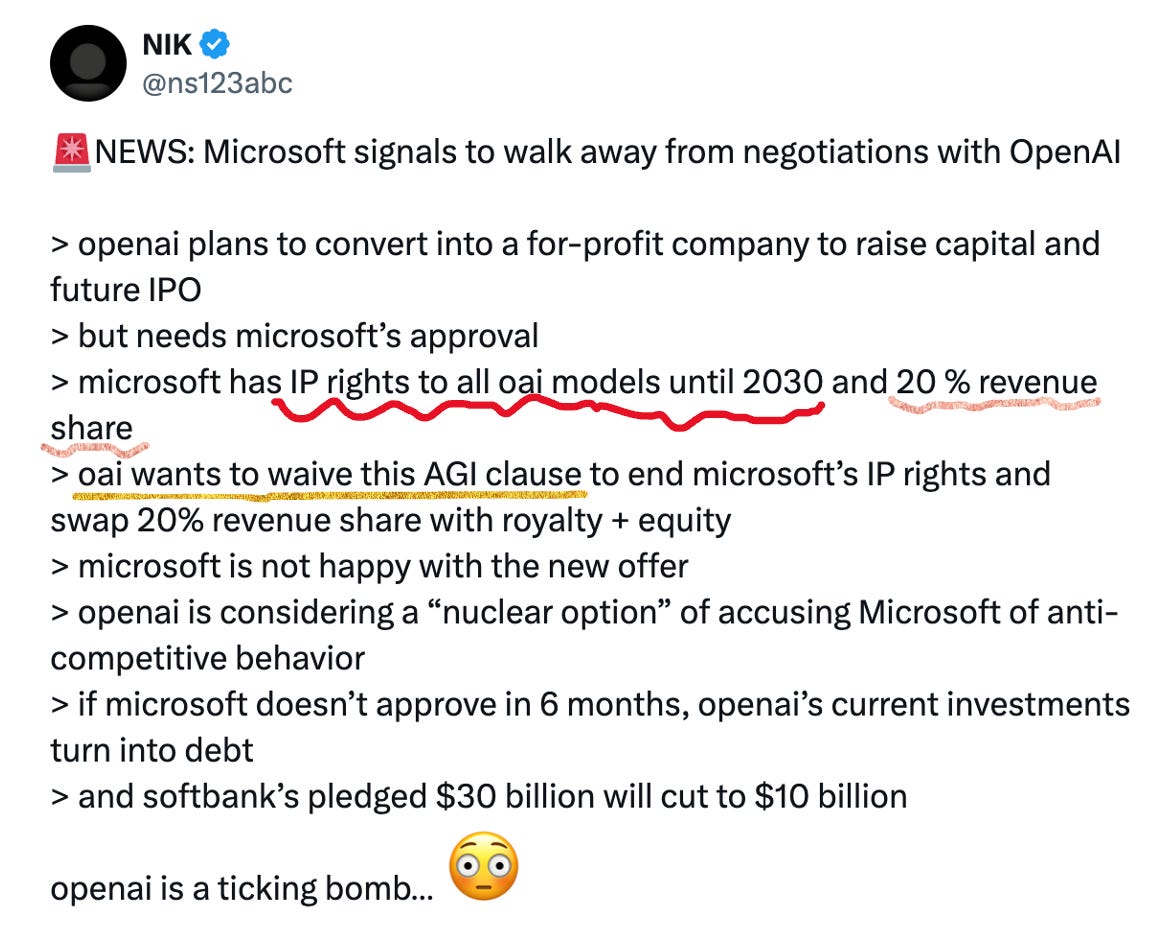

Microsoft vs. OpenAI legal drama continues

Are you tired of the legal section yet? Because there’s more!

I shared a lot about this already, so for a full recap revisit last week’s blog post. The continuation this week is around the AGI clause. Here’s a great recap of the AGI issue, from The Information:

What This Means for the Rest of Us 🎯

Watching this week's developments, three things are crystal clear:

1. Know Your Data: Anthropic lawsuit makes it clear: data provenance, licensing verification, and audit trails aren't nice-to-haves anymore - they're core infrastructure.

2. No One Stays in Their Lane Anymore: Your infrastructure provider today might be your competitor tomorrow. Your partner might become your platform. Plan accordingly.

3. Context is Everything: Users don’t care whether you fine-tuned, RAG-ified, or tool-called – they care that answers arrive instantly and feel tailored. Products that own the context pipeline (memory, retrieval, and action hooks) will outpace those chasing raw model quality alone.

It’s a busy, crazy, wonderful time! And in the meantime, remember, NAMES DO MATTER: