Checkmate, GPT

Post-GPT-5 positioning, more talent raids, and a whole lotta drama

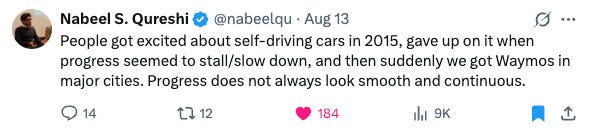

In chess, position matters, and nowhere was that clearer than in all the drama this week. The common thread: distribution and culture are doing as much work as raw model quality. GPT-5 ruffled some feathers and had to fold to the userbase, Microsoft is selling “we’re the startup now,” Anthropic doubled down on culture over comp theatrics, Elon and Sam took their rivalry from a nasty X spat to an AI-vs-AI chessboard (a reminder that attention is its own distribution!), Perplexity YOLO'd an offer to buy Google Chrome, and Apple is apparently finally making moves… on a 2026–27 clock. 🙃 Let’s get into it!

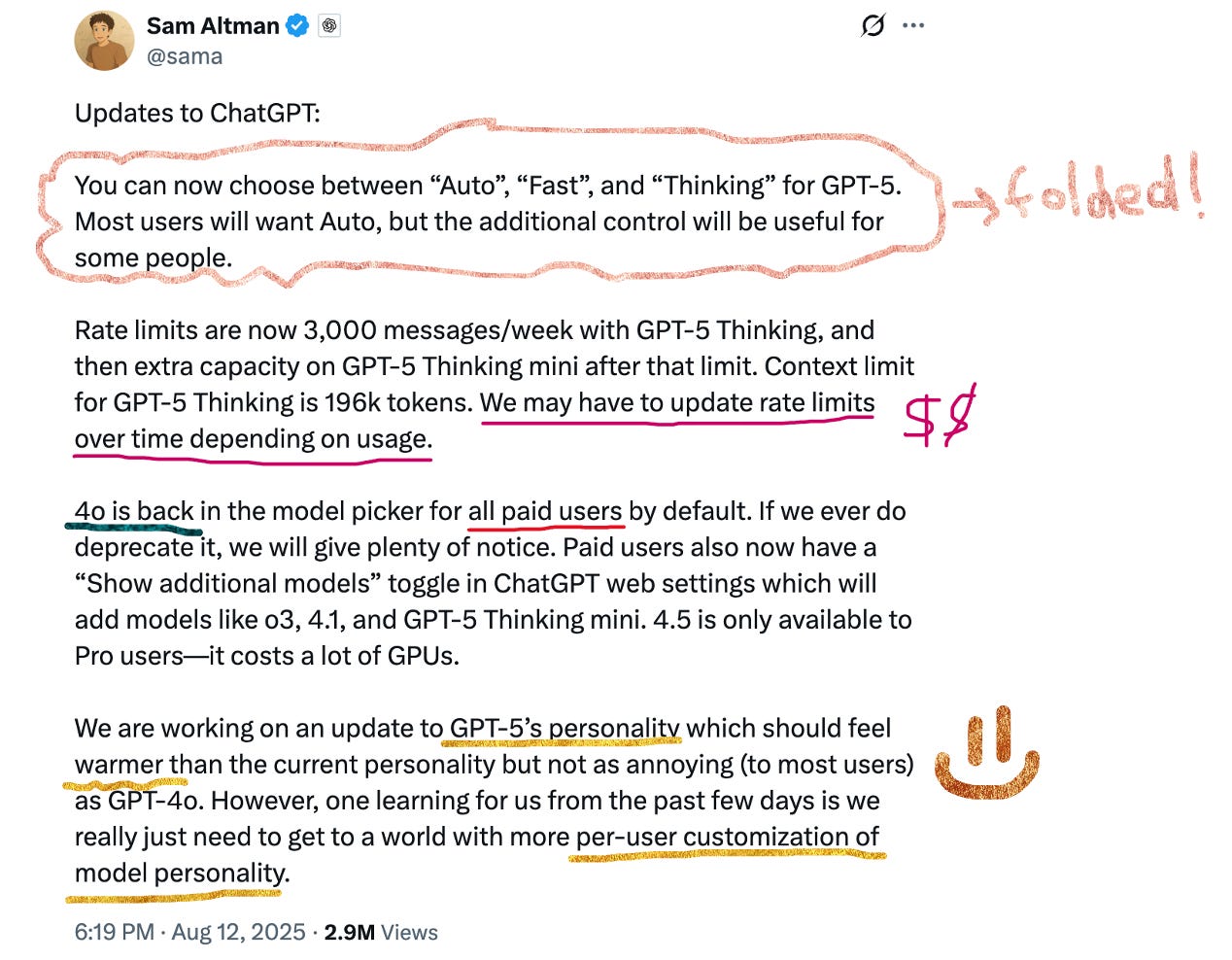

GPT-5: router era, product dust-ups, and a clear distribution play

It has been a bumpy post-release week for OpenAI! ICYMI, I did a deeper dive into both my (very!) strong feelings about the release, and the technical overview. As a quick refresher: OpenAI framed GPT-5 as a unified, faster-when-it-should-be / deeper-when-it-matters system. Pricing shifts look friendly on input, with some “invisible” reasoning tokens effectively counting as output – so devs, watch your bill. Meanwhile, the user backlash over sunsetting 4o (and “colder” vibes) forced a partial rollback and new personality controls.
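To make the "watch your bill" point concrete, here is a minimal sketch of how hidden reasoning tokens can change the cost of a single request. The per-million-token prices and token counts below are made-up placeholders, not OpenAI's actual rates, and `Pricing` and `estimate_cost` are hypothetical helper names.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    # Hypothetical prices in USD per 1M tokens (not real GPT-5 rates).
    input_per_mtok: float
    output_per_mtok: float


def estimate_cost(pricing: Pricing, input_tokens: int,
                  visible_output_tokens: int, reasoning_tokens: int) -> float:
    """Estimate request cost, billing reasoning tokens at the output rate."""
    billed_output = visible_output_tokens + reasoning_tokens
    total = (input_tokens * pricing.input_per_mtok
             + billed_output * pricing.output_per_mtok)
    return total / 1_000_000


pricing = Pricing(input_per_mtok=1.0, output_per_mtok=10.0)

# Same prompt and same visible answer, with and without hidden reasoning.
naive = estimate_cost(pricing, 2_000, 500, reasoning_tokens=0)
actual = estimate_cost(pricing, 2_000, 500, reasoning_tokens=3_000)
print(f"naive estimate: ${naive:.4f}, with reasoning: ${actual:.4f}")
```

With these placeholder numbers the visible answer is identical, but the request that "thinks" for 3,000 tokens costs several times more, which is why it is worth logging reasoning token counts separately if your provider reports them.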

What to make of this less-than-smooth rollout? I turn to some of my favorite newsletters for insight:

SemiAnalysis argues GPT-5 also sets up classic ad monetization mechanics (think “router” creates high-intent surfaces). Whether you buy the super-app thesis or not, expect more sponsored answers, lead-gen, and enterprise placement models to show up in the UI and API. Just look at this crazy website visits chart:

Alberto Romero from The Algorithmic Bridge asked the question that many of us couldn’t help but ask. The rollout was too messy, too convoluted, and it was odd that OpenAI folded waaaay too quickly to the pushback. Sooo…. was it somehow a clever ploy?

I mean, it’s kind of crazy if OpenAI staged a bait-and-switch to push 4o usage into paid tiers, so it’s probably not what happened and just a great coincidence. But wowza, the sequence sure functions like a live A/B test of willingness to pay and persona preference: remove 4o, watch public outcry, then restore it for Plus only while signaling legacy models won’t live forever (“we’ll watch usage”). The “grand plan” may be unlikely, but the outcome is sure the same: strongly revealed preference data and a nudge toward paid.

Lastly, for some great technical commentary on the release straight from the horse’s mouth, look no further than Latent Space’s excellent episode with Greg Brockman (co-founder & President of OpenAI). They cover everything from the evolution of reasoning at OpenAI, to pricing and compute efficiency improvements, to the value of engineers in the age of AGI. Worth the full listen!

💵 More Money Moves 💵

I can’t possibly keep count anymore! Meta is poaching OpenAI, Microsoft is poaching DeepMind, DeepMind’s probably poaching everyone, and at least Anthropic is hanging around happy and apparently poach-proof!

OpenAI researchers go to Meta Superintelligence Labs

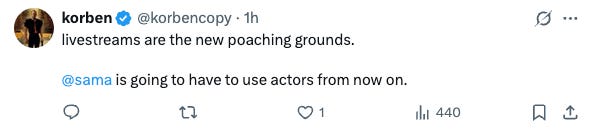

Lesson #1: Be careful who you put on livestreams!!!

You heard it right – the pipeline now is watch the livestream -> circle faces for poaching! Meta has sniped more OpenAI researchers, all three featured recently in OpenAI videos - oof!

Microsoft to DeepMind: “We’re the startup now”

I didn’t realize the Microsofties (their words, not mine!!!!) were entering the poaching wars! Apparently Mustafa Suleyman (DeepMind co-founder -> Inflection co-founder -> acquihire by MSFT) has been leading the charge, touting fewer layers, more shipping, and a mandate to move. And apparently, the pitch is resonating! Multiple reports count two dozen-plus ex-DeepMind hires decamping to Microsoft AI under Suleyman.

Okay you may be thinking – hold up Jess, why would a fancy schmancy researcher join Microsoft? Well, I was thinking about this too, because it doesn’t feel like Microsoft Research is doing anything terribly exciting (sorry, but it’s true). And Microsoft can’t out-Meta Meta on comp every time. But perhaps…. it can credibly sell impact and distribution (Copilot, Windows, Azure, etc). And maybe DeepMind feels so deeply entrenched into Google that MSFT can sell the “startup vibe at a platform company” pitch. Maybe? Maybe!! I guess let’s see what they build! Would be fun to add another real player to the foundation model / superintelligence / AGI race. They sure have a lot of money. And a lot of cards to play, including their OpenAI stock. And a lot of money!!!! Could be a real contender in the model palooza to come.

Anthropic’s talent edge without billion-dollar packages

And then finally, we have everyone’s golden child. SignalFire’s data and follow-on reporting say Anthropic is growing their engineering headcount faster than OpenAI, Meta, or Google – while refusing negotiation games that break internal fairness. Translation: mission and clear comp ladders are beating raw cash for many senior ICs and researchers.

Why it matters: Retention is a compounding advantage; it preserves tacit knowledge and reduces coordination drag. If Anthropic maintains momentum on product (Claude Code; hybrid reasoning) and keeps winning on culture, they’ll continue punching above their spend.

👊 Elon v. Sam: The Pettiest Timeline

Honestly… where to even start. I don’t even know why I’m writing about this since it’s all so lowbrow, but I guess I can’t take my eyes off?! I once wrote a post called “The Real CEOs of Silicon Valley” (yes, a nod to the real housewives, given all the free entertainment these dudes give us) about this feud and it’s only escalated and gotten nastier. This week we were “gifted” some more drama – accusations about X’s algorithm, antitrust barbs, and general mudslinging. It was not pretty.

Now, as I’ve written before, my side love (outside of AI, duh) is playing chess. So when chess meets AI and my interests collide, you bet I’m dialed in. So I was excited to follow the face-off of AI engines in chess! Kaggle hosted a chess exhibition tournament a week ago, where Elon and Sam (via xAI’s Grok 4 and OpenAI’s o3) had another venue to face off. Now, unlike AlphaZero, these engines are not specifically built for chess, but alas, it is still fun to watch them. The resulting fight went in Sam’s favor – OpenAI’s o3 swept xAI’s Grok 4 in the final, though Grok 4 had been “by far the strongest chess player” up until the semifinals, and then proceeded to blunder its queen (repeatedly!!! ouch) in its final games.

Next, per the tweet I screenshotted above, maybe a fight in the Colosseum. Just please leave my timeline, k thanks?!

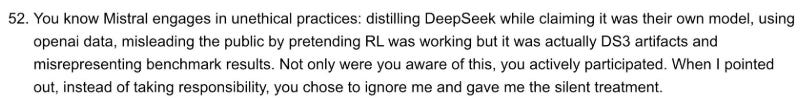

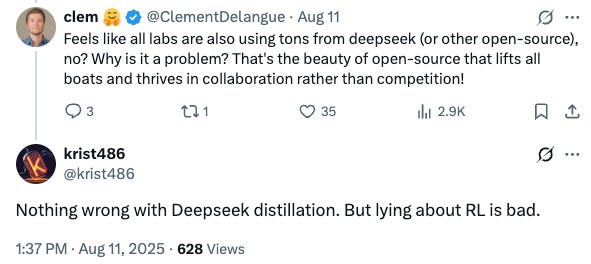

Rumor mill: Mistral distilled DeepSeek?

A viral X post based on a leaked email (?!) alleges internal misconduct at Mistral, including distilling DeepSeek models and misrepresenting results. These are unverified claims; treat as rumor until there’s primary evidence or reporting. Still, the reaction shows how sensitive this space is to provenance, licensing, and benchmark integrity.

The core accusation from the email re: distillation was:

I was trying to do some investigative work (i.e. reading more X posts, haha) to get to the bottom of this.

My understanding is that distillation is actually totally within DeepSeek V3’s license. So the issue isn’t actually distilling it, but about misleading RL claims.

But then something is still not adding up on the RL claim:

Anyways - anyone with more info, please do share! I’m quite curious, given Mistral is 1) a real contender in open source models 2) obviously quite well-funded.

Apple: Charismatic OS, a “Pixar lamp” robot, and a smarter Siri (on a 2026–27 timeline)

Apple might be alive in AI-land! Bloomberg says that Apple is focused on physical AI and plotting a smart-home push: a tabletop robot with a swiveling display, a smart speaker with a screen, security cameras, and a lifelike Siri running on a new multi-user OS dubbed Charismatic.

If the hardware lands, Apple can reframe assistants as shared household systems – not just single-user chatbots. That would be very cool! But the clock matters; 2026–27 is a long runway for Google/Amazon (and OpenAI-adjacent devices) to entrench. We shall seeeee!

Perplexity Offers to Buy Google Chrome

Perplexity offers $34B to buy Chrome. Shoot your shot, king! Do they have the money? Is it real? Is that the lowest-ball offer of all time? Who cares!!!! It’s AI szn! There’s no need for coherence or sanity!!!

This of course prompted many funny memes of other people offering to randomly buy companies / products totally outside their means:

💻🛠️ Okay, now to the actual fun news - technical releases and features!!! 🛠️ 💻

Claude adds opt-in memory + 1M context windows

Anthropic shipped an optional memory feature that can search and reference prior chats when you ask. It’s off by default and designed not to build a persistent user profile. For enterprises, this “pull-only recall” is easier to reason about for privacy and DSAR workflows than ambient, always-on memory.

The UX win is obvious (no more “remind me what we discussed last week”), but the real unlock is safer continuity for long-running projects with auditability.
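For intuition on why "pull-only recall" is easier to audit than ambient memory, here is a toy sketch. It is not how Anthropic implements the feature; `ChatStore`, its keyword matching, and the audit log are all hypothetical stand-ins for the general idea: nothing is retrieved unless the feature is switched on and the user explicitly asks.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ChatStore:
    """Toy store of past chats that is only searched on explicit request."""
    enabled: bool = False  # opt-in: recall is off by default
    chats: List[str] = field(default_factory=list)
    audit_log: List[str] = field(default_factory=list)

    def add(self, text: str) -> None:
        self.chats.append(text)

    def recall(self, query: str) -> List[str]:
        # No ambient lookup: return nothing unless the user opted in.
        if not self.enabled:
            return []
        # Record every explicit lookup so it can be reviewed later.
        self.audit_log.append(query)
        terms = query.lower().split()
        return [c for c in self.chats if any(t in c.lower() for t in terms)]


store = ChatStore()
store.add("Last week we agreed to ship the pricing page on Friday.")
store.add("Notes on the onboarding redesign.")

off_result = store.recall("pricing")   # feature still off, nothing returned
store.enabled = True
on_result = store.recall("pricing")    # explicit, logged lookup
print(off_result, on_result, store.audit_log)
```

The design choice worth noticing is that every retrieval has a query and a log entry attached, which is the property that makes privacy reviews and data-access requests easier to reason about.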

Lastly on the Claude front, an announcement that needs no more explanation: 1M tokens of context in the API!!!!

Gemma 3 270M: a tiny, instruction-following model for on-device specialists

Google added Gemma 3 270M – a 270M-parameter model designed for task-specific fine-tuning with strong instruction-following out of the box. It ships with QAT/INT4 checkpoints, a 256k-token vocab (helpful for rare tokens), and measured 0.75% battery for 25 conversations on a Pixel 9 Pro in internal tests. It’s available via HF/Ollama/Kaggle/MLX and documented in the Gemma 3 releases page. This isn’t meant to be your general chat model for sure (see above from Simon for the cute poem instead of an SVG). What it is is a cheap, fast base for classifiers, extractors, routers, and other narrow agents you want to run on device or on tiny servers.

Why it matters: The “fleet of small experts” pattern keeps getting more attractive – lower latency, real privacy (on-device), and unit economics that scale. Pair a handful of 270M specialists with a bigger router when you truly need it.
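Here is one way to read the "fleet of small experts" pattern as code, as a rough sketch only. The specialists are plain functions standing in for fine-tuned small models, and `big_model_fallback` stands in for a larger model you only call when no specialist fits. All names are hypothetical and nothing here calls a real Gemma API.

```python
import re
from typing import Callable, Dict

Specialist = Callable[[str], str]


def sentiment_specialist(text: str) -> str:
    # Stand-in for a small fine-tuned classifier.
    return "negative" if "refund" in text.lower() else "positive"


def order_id_specialist(text: str) -> str:
    # Stand-in for a small fine-tuned extractor.
    match = re.search(r"#(\d+)", text)
    return match.group(1) if match else ""


def big_model_fallback(text: str) -> str:
    # Stand-in for the larger, slower, pricier model.
    return "escalated: " + text


class Router:
    """Send each task to its specialist, else fall back to the big model."""

    def __init__(self, specialists: Dict[str, Specialist],
                 fallback: Specialist) -> None:
        self.specialists = specialists
        self.fallback = fallback

    def route(self, task: str, text: str) -> str:
        handler = self.specialists.get(task, self.fallback)
        return handler(text)


router = Router(
    {"sentiment": sentiment_specialist, "order_id": order_id_specialist},
    big_model_fallback,
)
print(router.route("sentiment", "I want a refund"))
print(router.route("order_id", "Order #4821 is late"))
print(router.route("poem", "Write me a haiku"))
```

In a real setup each specialist would be its own fine-tuned checkpoint, and the routing decision itself might come from a model rather than a task label, but the shape stays the same: cheap narrow paths first, the expensive path only when needed.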

Meta’s DINOv3: universal vision backbones

Meta released DINOv3, a family of self-supervised vision backbones (ViT + ConvNeXt) that produce high-quality dense features and, per the docs, beat specialized SOTA across a broad range of tasks without fine-tuning. Checkpoints + code are live (HF + PyTorch/Transformers), with variants trained on a large web dataset (LVD-1689M) and a satellite dataset (SAT-493M). The lineup spans from small ViTs to a ~7B-param ViT; some larger weights appear gated.

Why it matters: This is a plug-and-play backbone for enterprise CV stacks – search, inspection, mapping, robotics – where dense, high-resolution features move the needle on segmentation, detection, and retrieval without task-specific pretraining.

What I’m watching next

GPT-5 monetization surfaces: Do we see sponsored answer units or API-level ad rails appear in the wild? Was this a clever ploy to force 4o free users to convert to paid? 👀

Recruiting flow: I bet there will be more poaching drama!! Will Anthropic researchers break? Will Zuck drain OpenAI dry? Will Microsoft AI become a thing? Time shall tell!

Provenance audits: Any credible reporting that clarifies the Mistral/DeepSeek allegations. Keep me posted if you find something out!

Apple’s cadence: Concrete dev docs or SDK hints for Charismatic/voice-driven app control ahead of the hardware dates. I’VE BEEN WAITING FOR A LONG TIME THO!!!

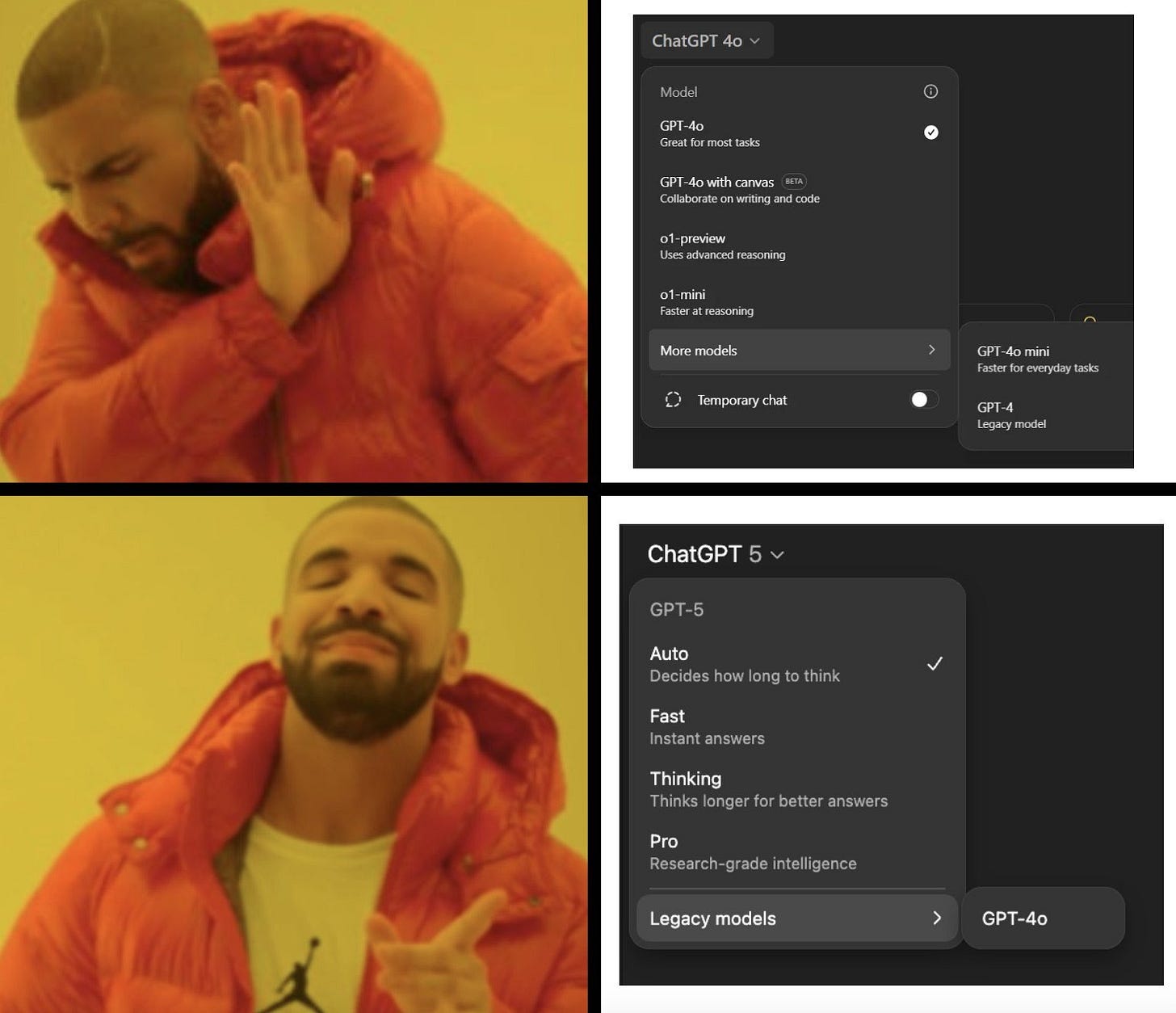

Parting Words aka Meme of the Week

Wow there were many to choose from, but the below encapsulates the absurdity of our current timeline perfectly. Meme-queen extraordinaire Olivia Moore made the best one summarizing the GPT-5 rollout week – after all the naming drama, then routing drama… looks like we’re back to a model picker anyways 😂😂 –
